This happens often in nested vSAN environments in home labs. We play with networking, remove vSAN VMkernel adapters, shut vCenter down, remove hosts from the vSAN cluster… and then take one step too far, which leaves all our objects inaccessible, including vCenter. The data is still stored safely on the disk groups, but to access it we need to re-create the cluster.

How can this be done without vCenter? vSAN keeps working when vCenter is down, but what happens when vCenter is down and the cluster itself is broken or needs to be reconfigured?

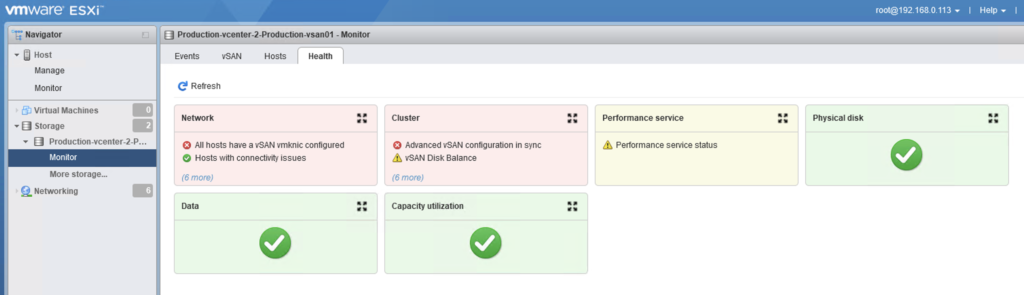

vSAN Health in the ESXi web interface is a good place to start assessing the “damage”. If all of the hosts are isolated, each of them will be the master of its own single-node vSAN cluster. If we do not see any other hosts in the Hosts tab, the host does not see any of its neighbors on the vSAN network.

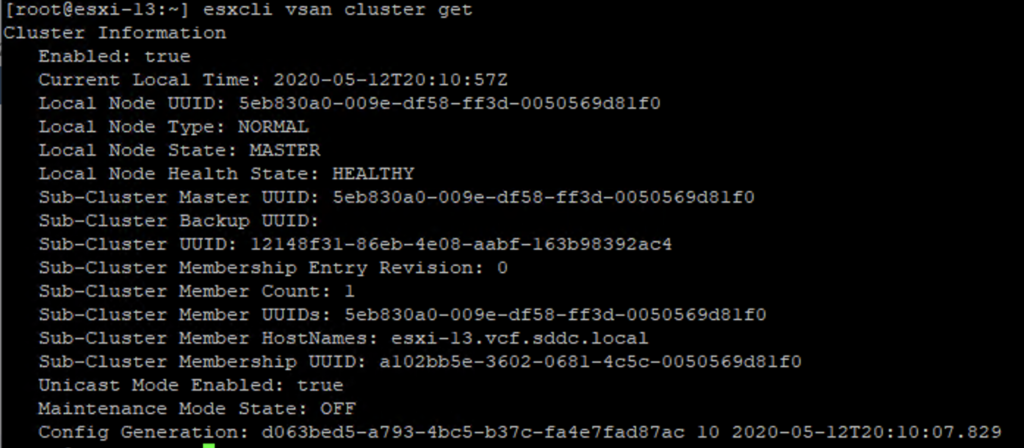

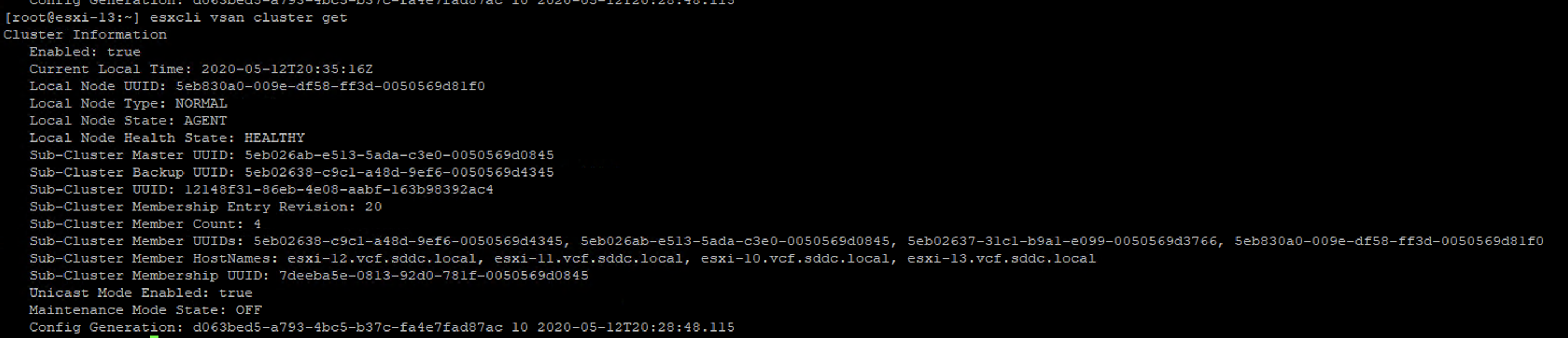

Next, we can SSH to each ESXi host and check the cluster status with the command `esxcli vsan cluster get`.

This will confirm that the hosts are isolated, or help us determine how the cluster is partitioned.
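When checking several hosts, it can help to pull just the relevant fields out of that output. The sketch below assumes the output contains `Local Node State:` and `Sub-Cluster Member Count:` lines, which is what this command typically prints; verify the labels on your build. `member_count` and `node_state` are hypothetical helper names, not part of esxcli.

```shell
#!/bin/sh
# Hypothetical helpers: both read `esxcli vsan cluster get` output on stdin.

# Print the number of hosts in this host's sub-cluster (partition).
member_count() {
    sed -n 's/^[[:space:]]*Sub-Cluster Member Count:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Print the role this host currently holds in its sub-cluster.
node_state() {
    sed -n 's/^[[:space:]]*Local Node State:[[:space:]]*\([A-Za-z_]*\).*/\1/p'
}

# Usage on a host (over SSH):
#   esxcli vsan cluster get | member_count
#   esxcli vsan cluster get | node_state
```

A member count of 1 on every host points to full isolation; different counts on different hosts point to a partitioned cluster.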

`vmkping -I vmkX x.x.x.x` helps us check whether this is a network problem on the nested host. In this scenario we assume the network works fine: pings are successful, but the nested hosts somehow cannot form the cluster.
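To test every neighbor in one go, the ping can be wrapped in a small loop. This is only a sketch: `check_peers` is a hypothetical helper name, the IP addresses in the usage line are placeholders, and the `PING` variable is an assumption added here so the loop can be dry-run on a machine without `vmkping`.

```shell
#!/bin/sh
# Hypothetical helper: ping each peer IP through the given VMkernel interface.
# Usage: check_peers <vmk> <ip> [<ip> ...]
check_peers() {
    vmk="$1"
    shift
    for ip in "$@"; do
        # PING defaults to vmkping; -I selects the interface, -c the packet count
        if ${PING:-vmkping} -I "$vmk" -c 3 "$ip" >/dev/null 2>&1; then
            echo "$ip reachable"
        else
            echo "$ip UNREACHABLE"
        fi
    done
}

# Usage on a host (placeholder addresses):
#   check_peers vmk2 192.0.2.11 192.0.2.12 192.0.2.13
```

If any peer shows up as unreachable, the problem is in the network and should be fixed there before touching the vSAN configuration.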

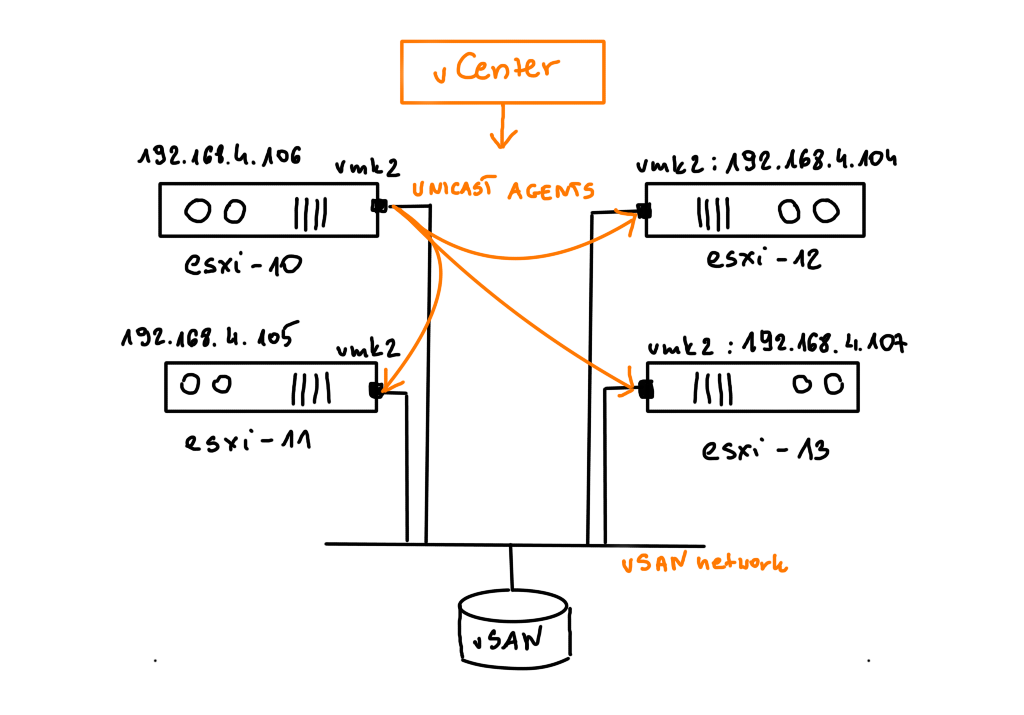

It is vCenter’s role to inform hosts about their vSAN neighbors when we form the cluster, but in this case we need to do this manually.

We need to “inform” the hosts about their neighbors ourselves: vSAN uses unicast, so each host needs an explicit list of its peers. On the screen below we see four vSAN 7.0 hosts with vmk2 tagged for vSAN traffic.

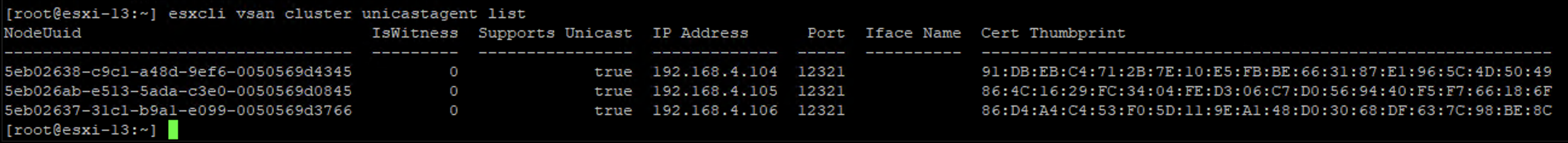

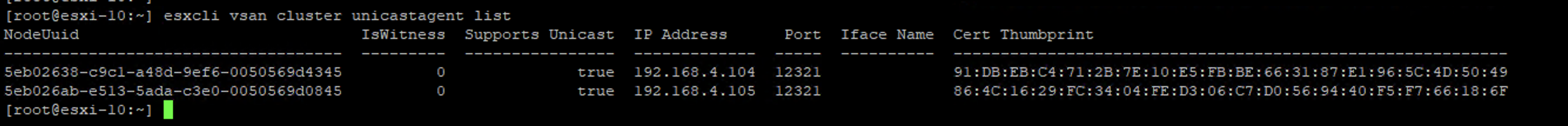

Every host should have a list of the other hosts in the cluster. We can check it using `esxcli vsan cluster unicastagent list`.

If the cluster runs fine, this command shows the complete list of neighbors from a single host’s perspective. Here we can see esxi-13 seeing all three other hosts on the vSAN network on vmk2.

On the screen below we can see that the host esxi-10 sees only esxi-11 and esxi-12, so the entry for esxi-13 is missing from its list.
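Spotting a missing neighbor by eye gets tedious with more hosts, so the comparison can be scripted. The sketch below assumes each neighbor's vSAN IP appears as a separate field in the list output; `missing_peers` is a hypothetical helper name and the addresses in the usage line are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: read `esxcli vsan cluster unicastagent list` output on
# stdin and print every expected peer IP (given as arguments) that is absent.
missing_peers() {
    listing=$(cat)
    for ip in "$@"; do
        # -F treats the dots literally, -w avoids 192.0.2.1 matching 192.0.2.11
        if ! printf '%s\n' "$listing" | grep -qwF -- "$ip"; then
            echo "$ip"
        fi
    done
}

# Usage on esxi-10 (placeholder addresses for esxi-11, esxi-12, esxi-13):
#   esxcli vsan cluster unicastagent list | missing_peers 192.0.2.11 192.0.2.12 192.0.2.13
```

An empty result means the host already knows about every expected neighbor.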

Assuming the network is fine and vCenter is down and cannot help us with this issue, we need to fill the gaps in the unicastagent lists manually. Just remember: never add a host’s own IP to its own list. Here is the command we have to use:

`esxcli vsan cluster unicastagent add -t node -u <Host_UUID> -U true -a <Host_VSAN_IP> -p 12321`

For esxi-10 the list has to contain esxi-11, esxi-12 and esxi-13; for esxi-11 it will be esxi-10, esxi-12 and esxi-13, and so on.
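Typing these commands by hand for every host is error-prone, so they can be generated from a small inventory instead. This is a minimal sketch, not an official tool: the `<name> <uuid> <vsan_ip>` inventory format and the `gen_unicast_cmds` helper are assumptions made for illustration, and the UUIDs and addresses in the usage example are placeholders. Each host's own UUID is shown as `Local Node UUID` in `esxcli vsan cluster get`.

```shell
#!/bin/sh
# Hypothetical helper: read "<name> <uuid> <vsan_ip>" lines on stdin and print
# the add command for every host except the one named in $1 (the host whose
# list is being configured), so a host never gets its own entry.
gen_unicast_cmds() {
    self="$1"
    while read -r name uuid ip || [ -n "$name" ]; do
        # skip blank lines and the host being configured
        [ -z "$name" ] && continue
        [ "$name" = "$self" ] && continue
        echo "esxcli vsan cluster unicastagent add -t node -u $uuid -U true -a $ip -p 12321"
    done
}

# Usage (placeholder inventory); review the output, then run it on esxi-10:
#   gen_unicast_cmds esxi-10 <<'EOF'
#   esxi-10 <uuid-of-esxi-10> 192.0.2.10
#   esxi-11 <uuid-of-esxi-11> 192.0.2.11
#   EOF
```

The function only prints the commands. Running them stays a manual step, which leaves a chance to review them and to skip neighbors that are already on the host's list.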

Once the lists are complete, the cluster should be re-created instantly and the objects should become available again. Check the sub-cluster member count: it was 1 and now it is 4.

The cluster is formed again and vCenter should start up.