VMware’s Virtually Speaking Podcast needs no introduction. It usually accompanies me on business trips, and not only because it ties in with my storage-focused job. It is entertaining and inspiring, and it is great to be able to hear our overseas subject matter experts.

“vSAN Off-Road” is the title of one of my favourite Virtually Speaking Podcast episodes from 2017. It is about vSAN being the most flexible storage…but there has to be a limit to this flexibility, doesn’t there? The speakers discuss what happens when we wander off the HCL and the recommended path…or decide to run a single cluster on servers from different vendors.

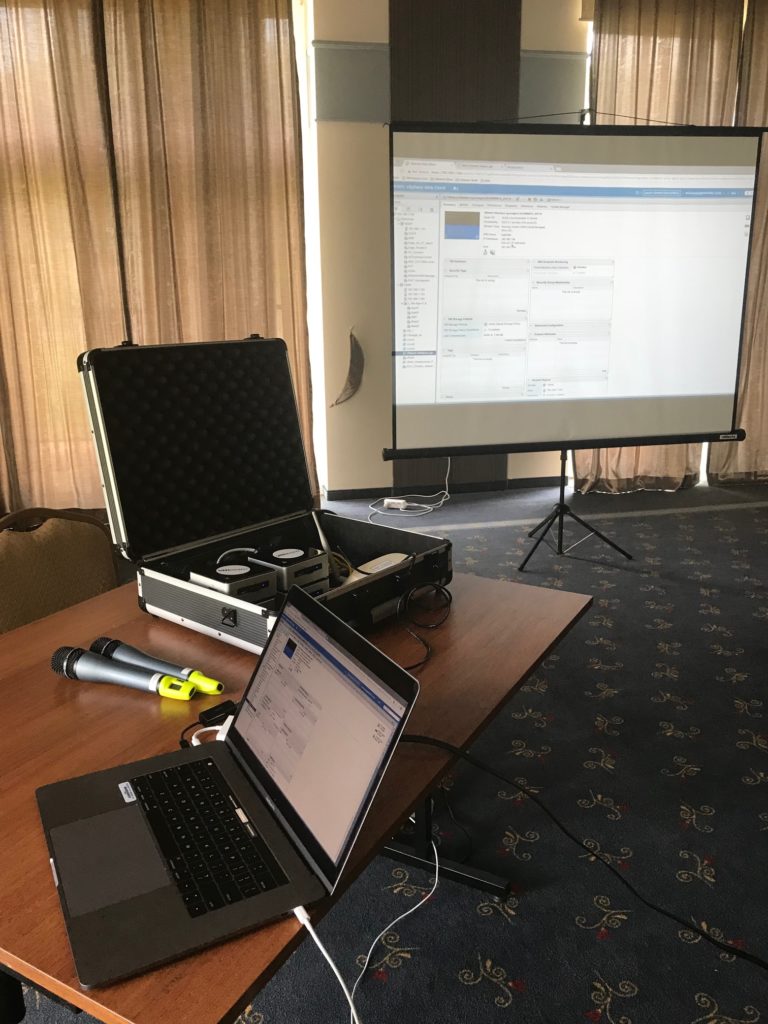

vSAN can run on “almost” every server that vSphere can run on. In the photo below you can see our vSAN suitcase, which usually lives under my desk in our office but also travels with me to workshops. vSAN is installed on three Intel NUCs (one SSD as the cache tier and a second SSD as the capacity tier), and the fourth NUC is a single ESXi host running management VMs and apps such as vCenter, DNS, the NSX controller, the Connection Server for VDI etc. The suitcase vSAN cluster has a datastore of around 1.2 TB with deduplication turned on.

This is not a supported hardware configuration, but it is just a demo. In real-life situations I come across a lot of off-road vSAN questions, so I decided to share my personal FAQ with you.

- Can we run a vSAN cluster on hosts coming from different vendors or different generations of hosts?

Technically, yes, although I can imagine troubleshooting could be difficult in such situations. There are a couple of things you have to consider. One of the most important is the compute layer. Combining different hosts means combining different CPUs. This can be a problem for vMotion, so you would probably need to enable EVC. Here is a nice post about it.

“If you would add a newer host to the cluster, containing newer CPU packages, EVC would potentially hide the new CPU instructions to the virtual machines. By doing so, EVC ensures that all virtual machines in the cluster are running on the same CPU instructions allowing for virtual machines to be live migrated (vMotion) between the ESXi hosts.”

When mixing different hosts in a vSAN cluster, it is important that they have similar CPU and RAM resources, so that one host does not slow down the others.
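To make the EVC idea concrete, here is a minimal sketch of the reasoning: the cluster has to settle on the oldest CPU generation present, and newer hosts hide anything beyond it. The generation list is a simplified illustration, and the host names and the `common_evc_baseline` helper are hypothetical. This is not how vCenter implements EVC, only a way to picture it.

```python
# Illustrative ordering of Intel CPU generations, oldest first.
# Assumption: a simplified example list, not the authoritative set of EVC modes.
INTEL_GENERATIONS = [
    "Merom", "Penryn", "Nehalem", "Westmere", "Sandy Bridge",
    "Ivy Bridge", "Haswell", "Broadwell", "Skylake",
]


def common_evc_baseline(host_generations):
    """Return the oldest CPU generation found among the given hosts.

    host_generations maps a host name to its CPU generation.
    """
    if not host_generations:
        raise ValueError("at least one host is required")
    # The generation with the lowest index in the list is the oldest one.
    return min(host_generations.values(), key=INTEL_GENERATIONS.index)


# Hypothetical mixed cluster: two older hosts plus one newer host.
hosts = {
    "esx-01": "Haswell",
    "esx-02": "Haswell",
    "esx-03": "Skylake",
}
baseline = common_evc_baseline(hosts)
print(baseline)
```

In this made-up cluster the newer host would be held back to the older generation's instruction set, which is the trade-off to keep in mind when adding newer servers to an existing cluster.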

- What about mixing different types of hosts: all-flash with hybrid?

This is not supported.

- Can an ESXi host have access both to vSAN datastore and VMFS datastore on SAN?

Yes, of course it can. vSAN does not care about other datastores. A host can have a datastore on a local disk, access to a LUN on a storage array, an iSCSI datastore and vSAN at the same time. It might get difficult to manage and automate, though, and you will definitely need more ports and HBAs.

- Is it possible to scale up a host by adding disks of different sizes?

Here you will find an official statement.

“If the components are no longer available to purchase try to add equal quantities of larger and faster devices. For example, as 200GB SSDs become more difficult to find, adding a comparable 400GB SSD should not negatively impact performance.”

- Can hosts have different numbers of disk groups?

They can. The challenge is that some hosts will handle more storage traffic than others, because VMDK components will not be evenly balanced across the cluster.
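A rough way to picture the imbalance is to look at each host's share of the raw capacity. The sketch below assumes every disk group has the same capacity and uses hypothetical host names and sizes. Real vSAN component placement also depends on storage policies and fault domains, so treat the shares only as a first approximation of where components and traffic will tend to land.

```python
def capacity_share(disk_groups_per_host, capacity_per_group_tb):
    """Return each host's fraction of the cluster's total raw capacity.

    Assumes every disk group contributes the same capacity.
    """
    raw = {
        host: groups * capacity_per_group_tb
        for host, groups in disk_groups_per_host.items()
    }
    total = sum(raw.values())
    if total <= 0:
        raise ValueError("cluster has no capacity")
    return {host: capacity / total for host, capacity in raw.items()}


# Hypothetical cluster: two hosts with one disk group, two hosts with two.
cluster = {"esx-01": 1, "esx-02": 1, "esx-03": 2, "esx-04": 2}
shares = capacity_share(cluster, capacity_per_group_tb=2.0)

for host, share in shares.items():
    print(f"{host}: {share:.0%} of raw capacity")
```

Here the hosts with two disk groups each hold twice the capacity of the others, so over time they are likely to store more components and serve more I/O.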

- Can a vSAN cluster have compute-only nodes?

Yes. You still need to license those hosts with a vSAN license and configure a vSAN VMkernel adapter on them. There is no official statement on the ratio of compute-only nodes to standard nodes. It all depends on the workload. Having just 3 vSAN nodes serving 10 diskless hosts that run compute-intensive apps might require good planning, especially on the networking side.
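For the networking part, a back-of-the-envelope calculation can help as a starting point. The sketch below assumes that storage traffic from the diskless hosts spreads evenly over the vSAN nodes, and it ignores replica traffic, resyncs and VMs running on the storage nodes themselves. The traffic and NIC figures are made-up inputs, and `storage_node_utilization` is a hypothetical helper, not a sizing tool.

```python
def storage_node_utilization(compute_hosts, gbps_per_compute_host,
                             storage_nodes, nic_gbps):
    """Estimate the fraction of each storage node's NIC bandwidth in use.

    Assumes traffic from compute-only hosts is spread evenly over the
    storage nodes; replica and resync traffic are ignored.
    """
    if storage_nodes <= 0 or nic_gbps <= 0:
        raise ValueError("storage_nodes and nic_gbps must be positive")
    total_gbps = compute_hosts * gbps_per_compute_host
    per_node_gbps = total_gbps / storage_nodes
    return per_node_gbps / nic_gbps


# Assumed inputs: 10 diskless hosts at 1.5 Gbit/s each,
# 3 vSAN nodes with one 10 Gbit/s vSAN uplink each.
util = storage_node_utilization(
    compute_hosts=10,
    gbps_per_compute_host=1.5,
    storage_nodes=3,
    nic_gbps=10.0,
)
print(f"{util:.0%} of each storage node's vSAN uplink")
```

Even with these modest assumed numbers, half of each uplink is used before any replica or rebuild traffic is counted, which is why the ratio deserves attention.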