When we want to migrate existing on-prem VMware workloads to the cloud, we have two options. The first is a lift-and-shift migration to a VMware-as-a-Service offering such as Google Cloud VMware Engine (GCVE), where VMware HCX can deliver high-performance migrations with minimal or no downtime. The second is to convert the VMs from VMware to a cloud-native format such as Google Compute Engine. This article briefly covers the second approach.

VM format conversion sounds scary, but it is actually an easy process if you use the Google Migrate for Compute (M4C) service.

Imagine you have a VM (or a bunch of them) running in a vSphere environment (on-prem or on GCVE) with an operating system supported by M4C. Within roughly an hour, you can have it running on Google Cloud. The exact time depends on the VM size and its data churn, and assumes you have connectivity between your on-prem VMware cluster and the VPC where your M4C service runs, or a Private Service Connection to your GCVE cluster.
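To get a feel for how VM size, churn and bandwidth translate into that "roughly an hour", a back-of-the-envelope estimate helps. This is a minimal Python sketch under stated assumptions, not how M4C schedules its copies: it assumes the whole disk is copied once, that replication achieves a fixed (hypothetical) fraction of the link bandwidth, and that churn is a steady stream of new data competing for the same link.

```python
"""Rough estimate of how long an initial replication could take.

Assumptions (not from M4C documentation): the whole disk is copied once,
throughput is a constant fraction of link bandwidth, and data churn during
the copy is a steady stream that uses part of the same link.
"""


def estimate_initial_sync_seconds(disk_gb: float, link_mbps: float,
                                  efficiency: float = 0.7,
                                  churn_gb_per_hour: float = 0.0) -> float:
    """Return the estimated seconds to copy disk_gb over a link_mbps link.

    disk_gb: data to copy, in decimal gigabytes (10**9 bytes)
    link_mbps: link bandwidth in megabits per second (10**6 bit/s)
    efficiency: hypothetical fraction of the bandwidth actually achieved
    churn_gb_per_hour: hypothetical rate at which the VM rewrites data
    """
    if disk_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid input")
    effective_bps = link_mbps * 1e6 * efficiency
    churn_bps = churn_gb_per_hour * 1e9 * 8 / 3600
    if churn_bps >= effective_bps:
        raise ValueError("churn outpaces the link; the copy never catches up")
    # Churn consumes part of the bandwidth; only the remainder makes progress.
    return disk_gb * 1e9 * 8 / (effective_bps - churn_bps)
```

For example, a 200 GB disk over a 1 Gbps link at the assumed 70% efficiency gives estimate_initial_sync_seconds(200, 1000) of about 2286 seconds, roughly 38 minutes, before any churn is added.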

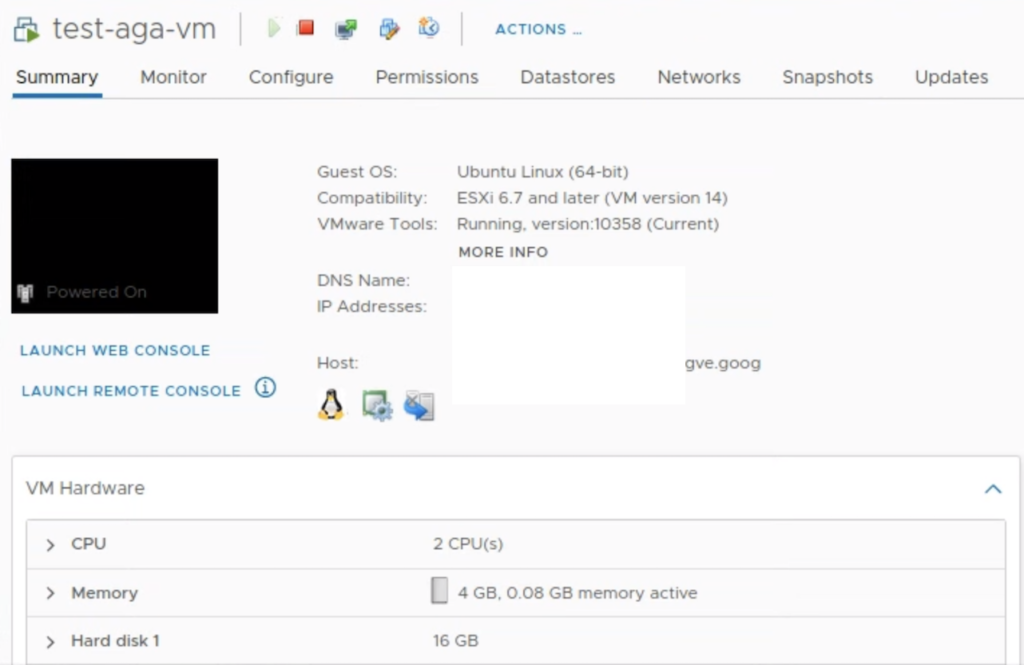

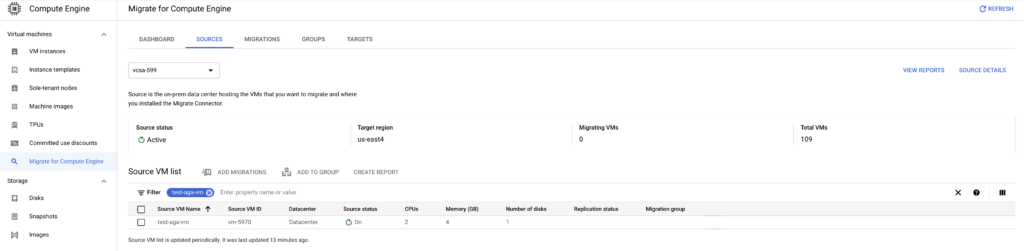

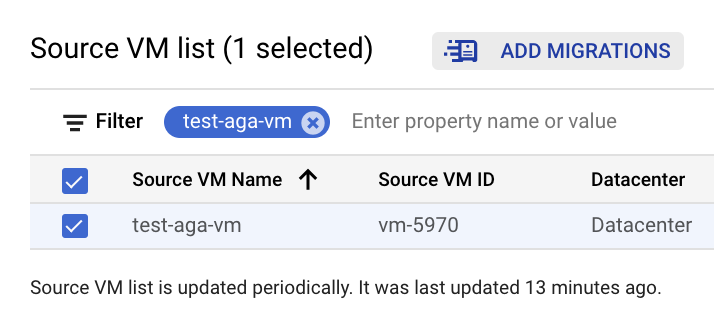

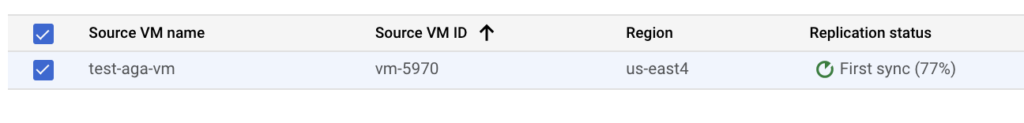

In my example, I will use test-aga-vm, which runs Ubuntu.

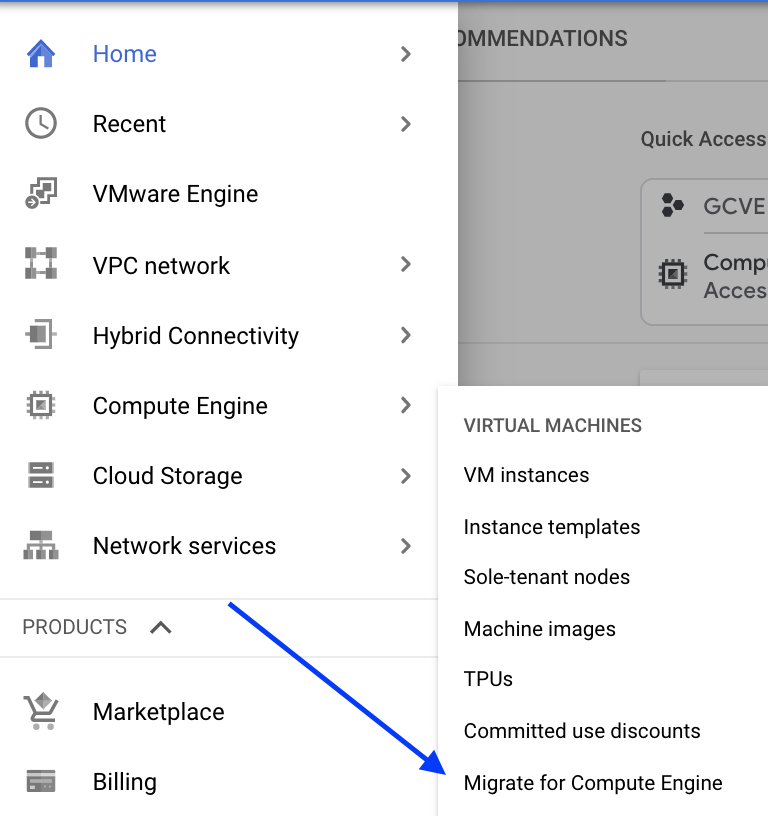

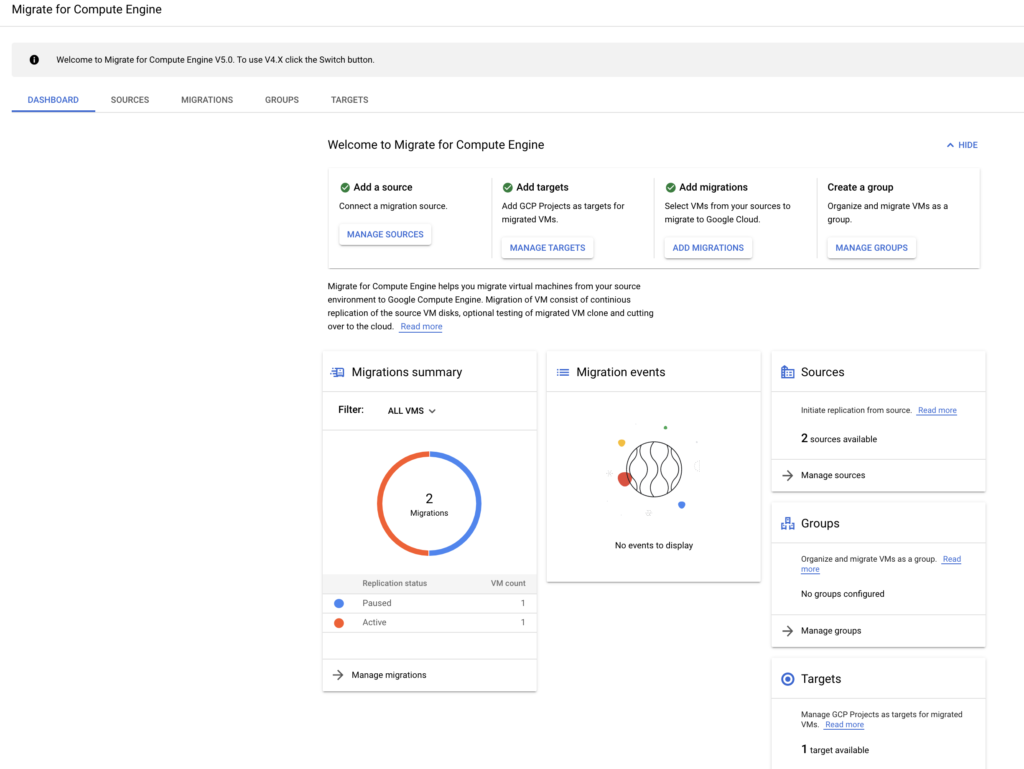

First, set up your M4C environment by following Google’s official documentation. One of the steps is to enable the Migrate for Compute API, after which you will find the M4C dashboard under “Compute Engine”.

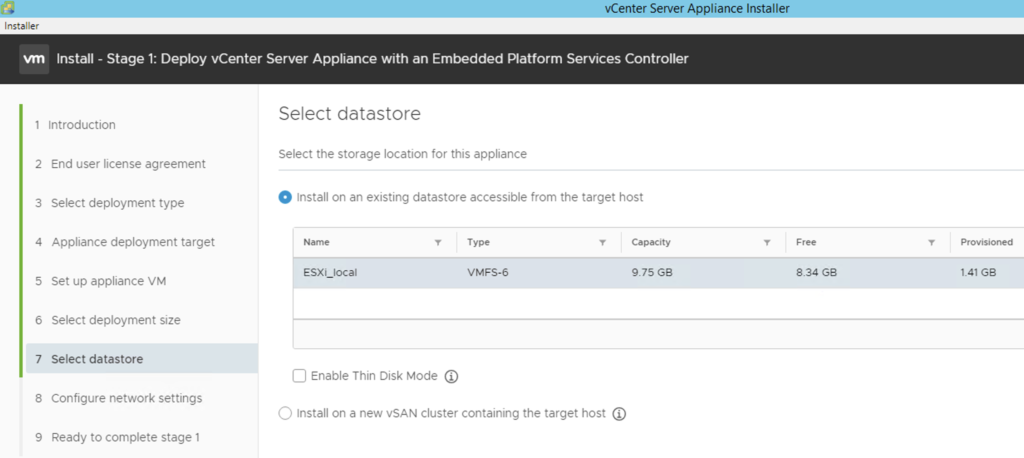

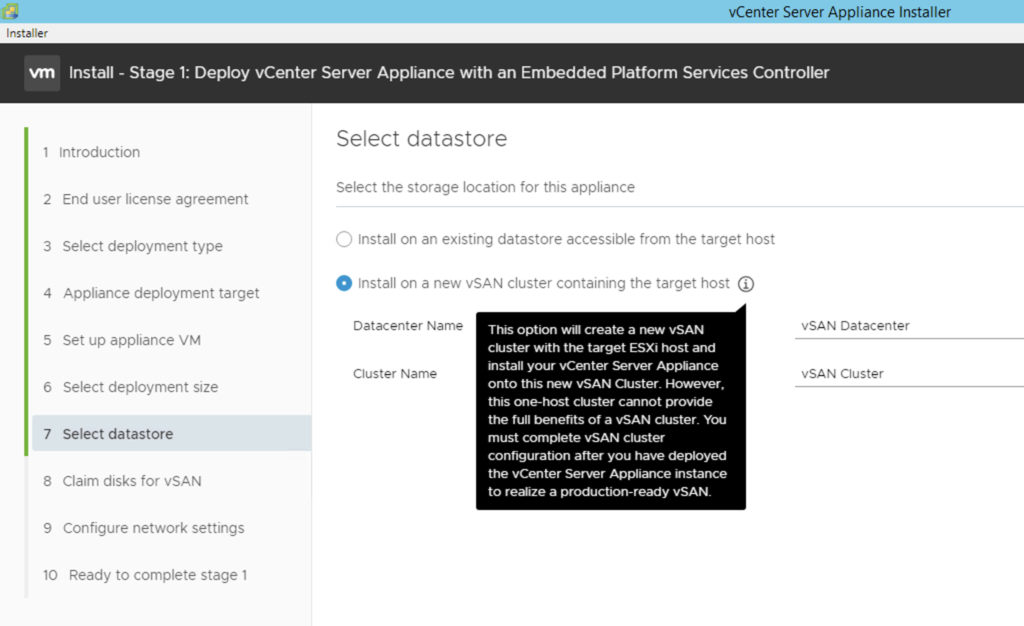

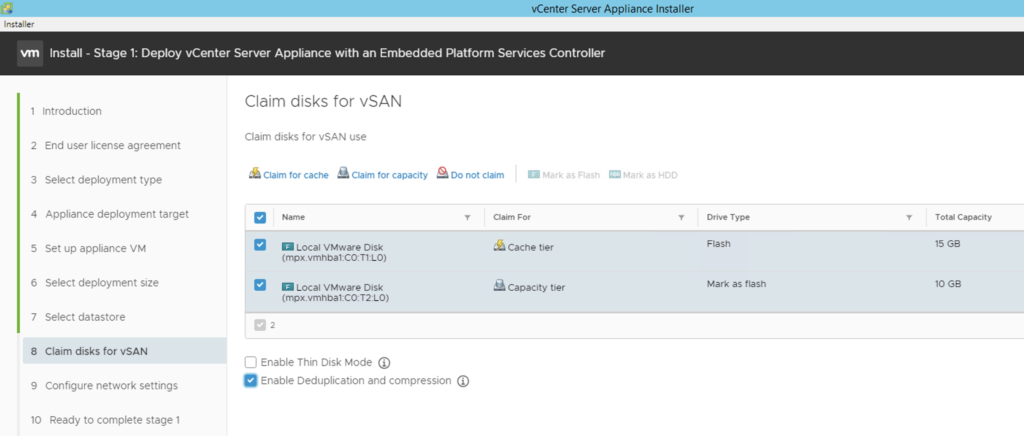

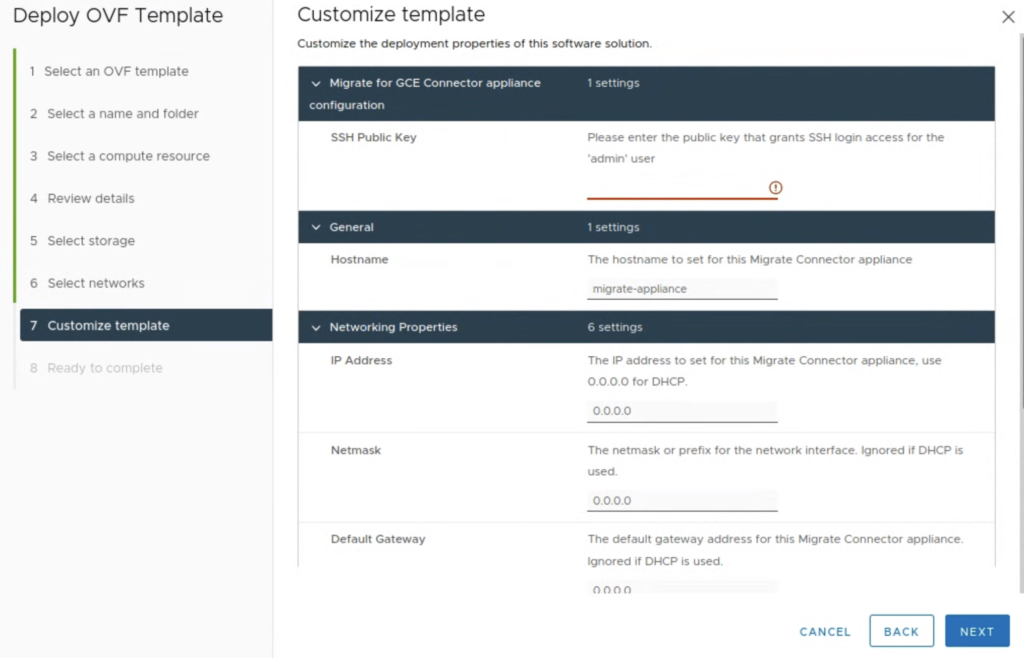

Once everything is set on the GCP side (APIs and permissions), the next step is to deploy the M4C appliance on your vSphere cluster. The documentation links to the most recent OVA version.

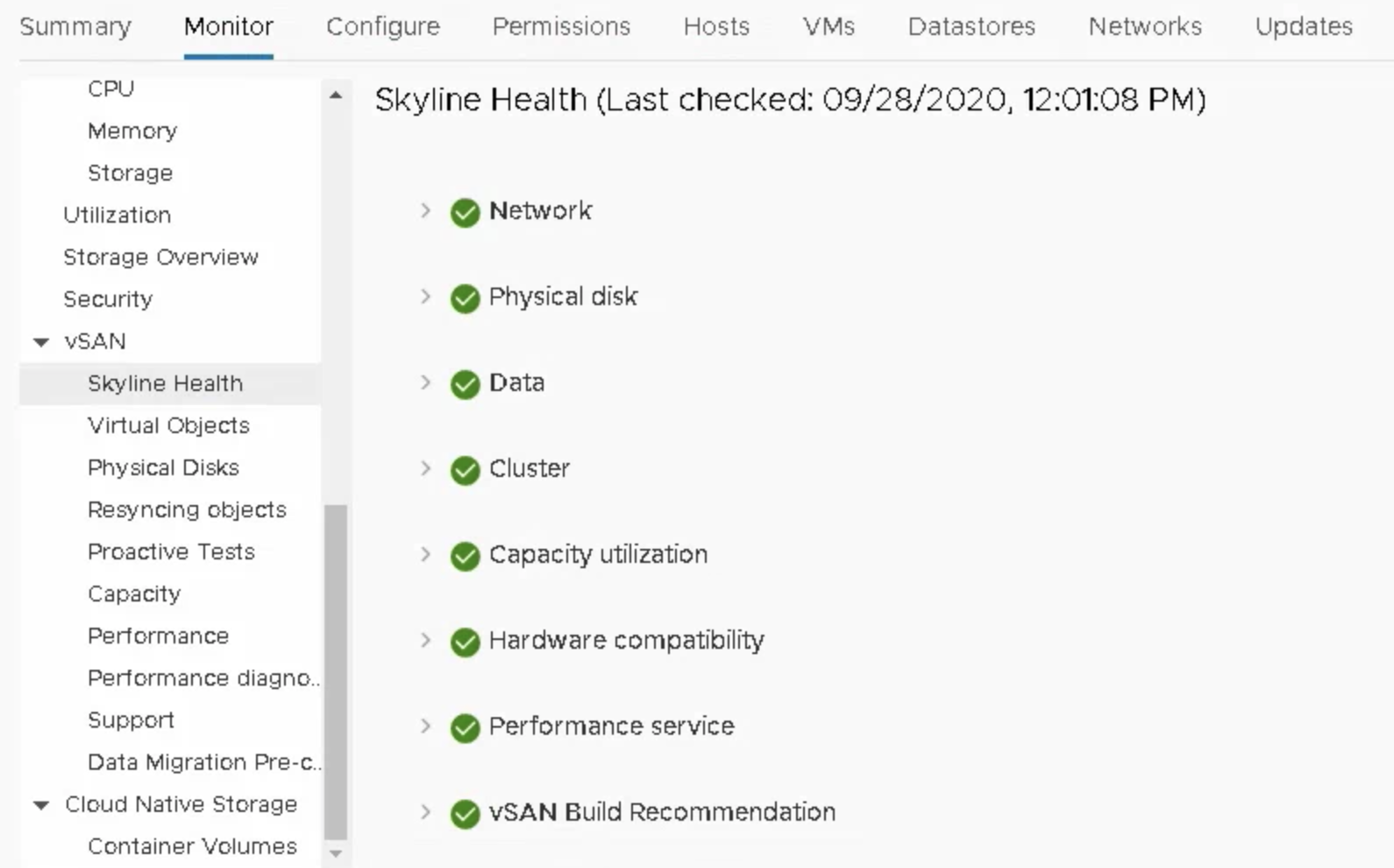

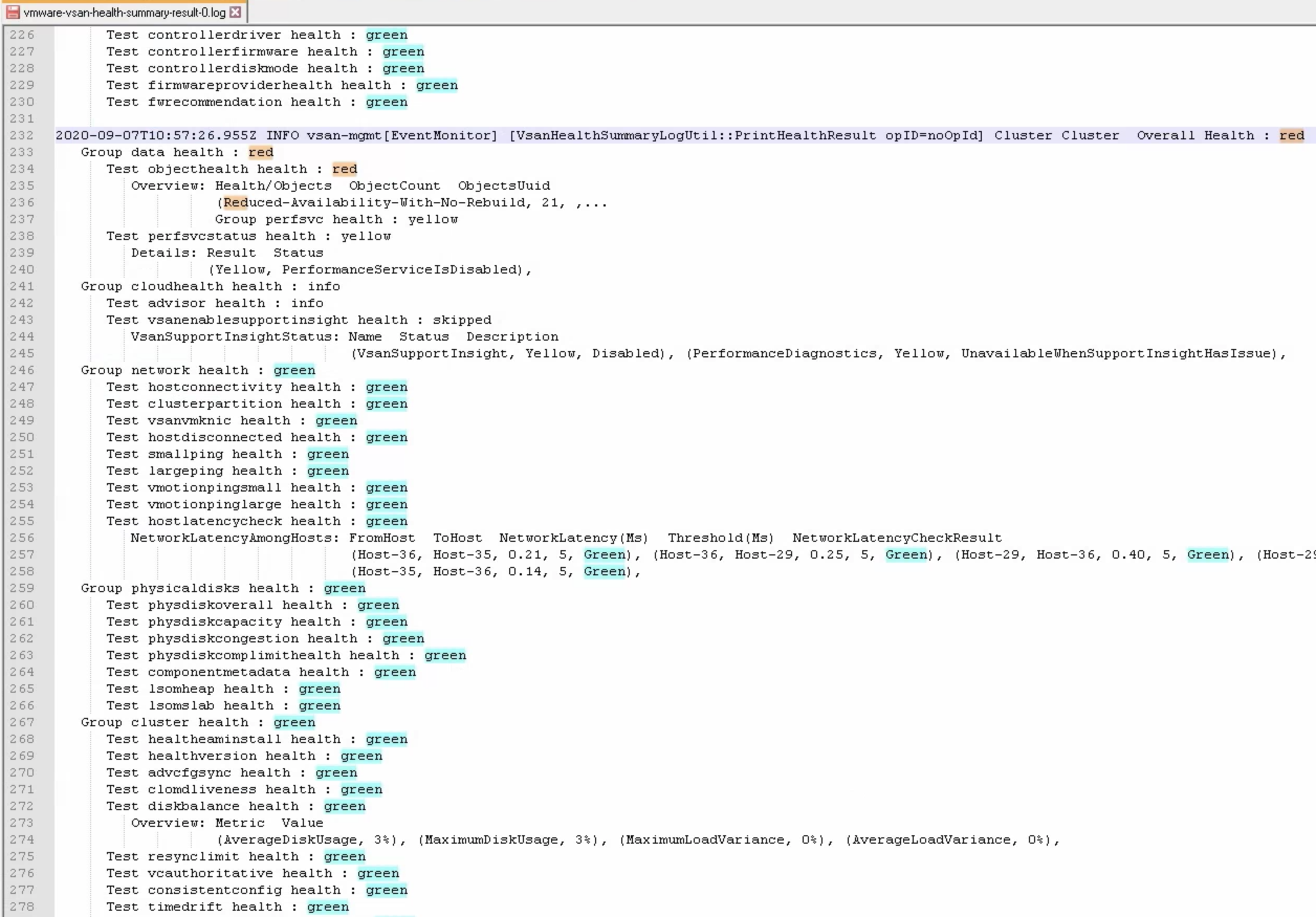

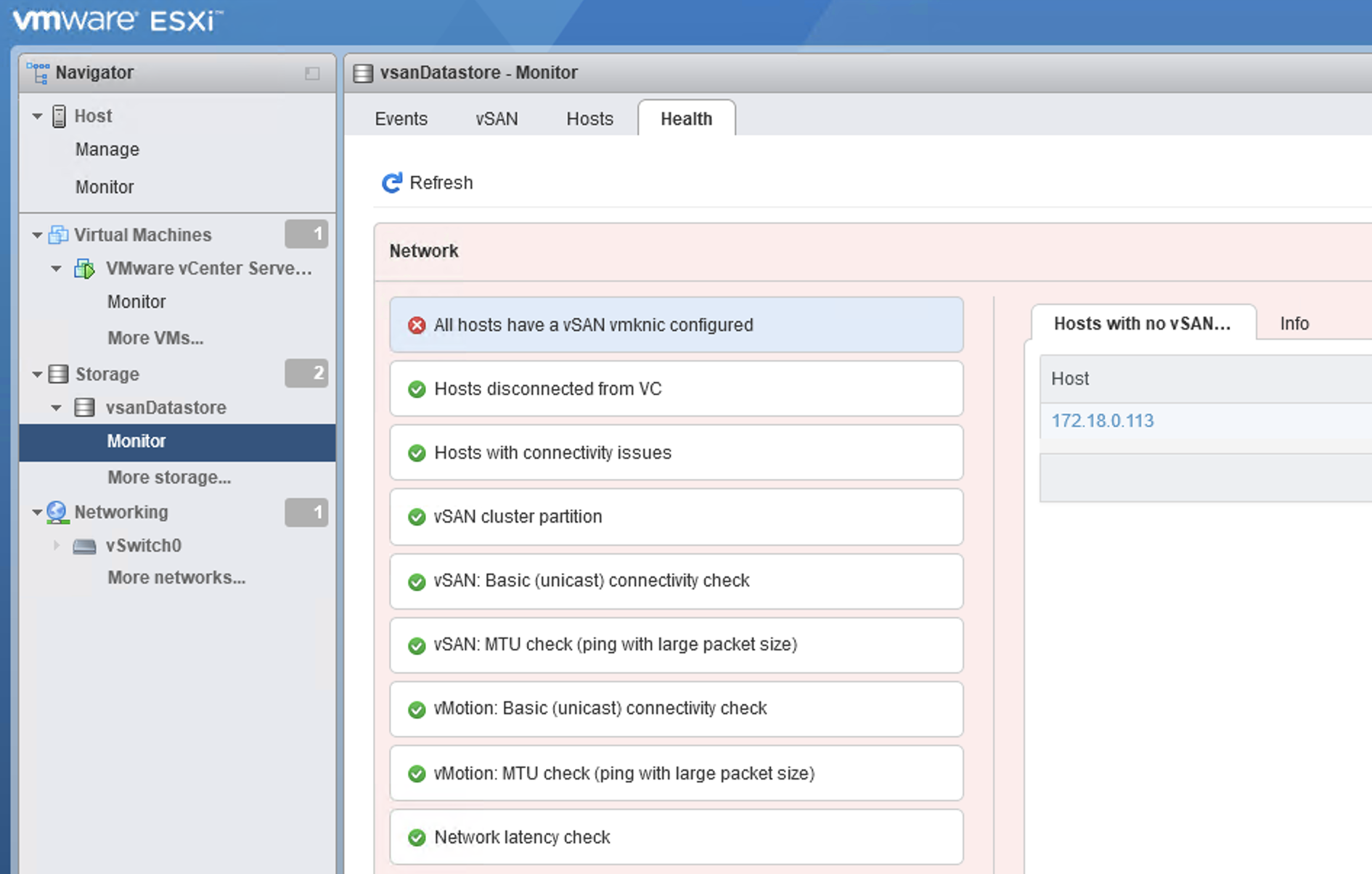

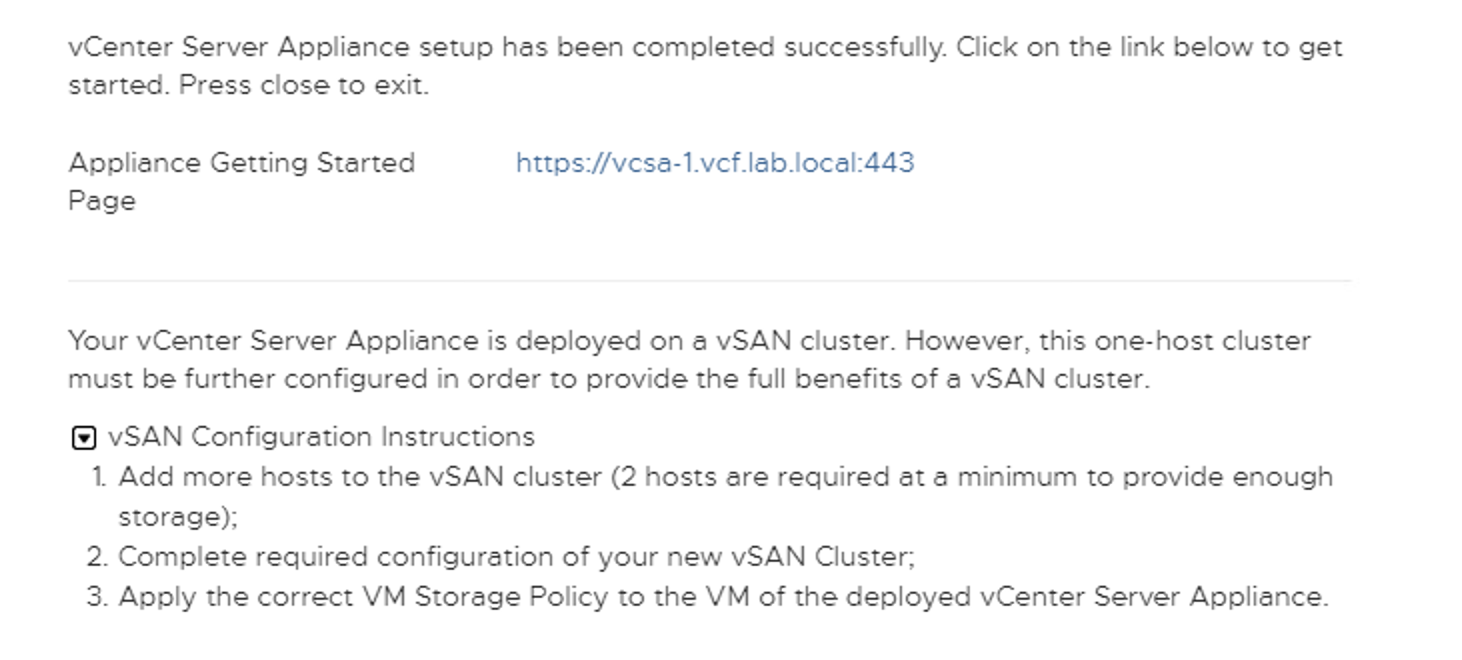

The most important part of deploying the OVA is placing it on a network segment that can reach googleapis.com and your DNS servers. The M4C appliance must be able to resolve your vCenter FQDN as well as the FQDNs of all ESXi hosts in your cluster. It is fine to run the appliance on a vSAN cluster.
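These network requirements are easy to verify before deploying the OVA. A minimal Python sketch, assuming you can run a script on a test VM in the same segment you plan to use for the appliance. The resolver is injectable so the check can be tested offline, and a plain TCP connection to googleapis.com on port 443 is only a rough stand-in for full API access (it does not exercise proxies or TLS inspection).

```python
"""Pre-flight check: can this network segment resolve and reach what the
M4C appliance needs? Host names are supplied by you; nothing here is part
of M4C itself."""
import socket
from typing import Callable, Dict, Iterable


def check_resolution(hostnames: Iterable[str],
                     resolver: Callable[[str], str] = socket.gethostbyname
                     ) -> Dict[str, bool]:
    """Return {hostname: True/False} depending on whether it resolves."""
    results = {}
    for name in hostnames:
        try:
            resolver(name)
            results[name] = True
        except OSError:  # socket.gaierror is a subclass of OSError
            results[name] = False
    return results


def check_https_reachable(host: str = "googleapis.com", port: int = 443,
                          timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refusals, timeouts and DNS failures
        return False
```

Pass your vCenter FQDN and every ESXi FQDN (for example the hypothetical names vcenter.example.local and esxi-01.example.local) to check_resolution; every entry should come back True, and check_https_reachable() should also return True, before you deploy the appliance.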

Once the M4C appliance is ready, the only way to SSH into it is with your SSH private key, so you must provide the matching SSH public key when you deploy the appliance.
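Because the private key is the only way into the appliance, it is worth sanity-checking the public key line before pasting it into the OVA deployment. A minimal Python sketch: it only checks the OpenSSH line structure (a known key-type prefix, a valid base64 blob, and a type name inside the blob that matches the prefix). It does not validate the key material, and the set of accepted key types is an assumption you may need to extend.

```python
"""Structural check of an OpenSSH public key line ("<type> <base64> [comment]").
Does not validate the key material itself."""
import base64
import struct

# Assumed list of key types you might use; extend if needed.
KNOWN_TYPES = {"ssh-rsa", "ssh-ed25519", "ecdsa-sha2-nistp256",
               "ecdsa-sha2-nistp384", "ecdsa-sha2-nistp521"}


def looks_like_openssh_pubkey(line: str) -> bool:
    parts = line.strip().split()
    if len(parts) < 2 or parts[0] not in KNOWN_TYPES:
        return False
    try:
        blob = base64.b64decode(parts[1], validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    # The blob starts with a 4-byte big-endian length, then the type name.
    if len(blob) < 4:
        return False
    (type_len,) = struct.unpack(">I", blob[:4])
    embedded_type = blob[4:4 + type_len]
    return (len(embedded_type) == type_len
            and embedded_type.decode("ascii", "replace") == parts[0])
```

A line that fails this check (for example one that was truncated or had its type prefix edited) would likely leave you locked out of the appliance.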

After the M4C appliance is powered on, it has to be registered in your project. The registration process looks like this:

admin@migrate-appliance:/m4c/OSS$ m4c register

Please enter vCenter host address: xxx.us-east4.gve.goog

vCenter server SSL certificate fingerprint is xxx Do you approve? [Y/n]Y

Please enter vCenter account name to be used by this appliance: solution-user-05@gve.local

Please enter vCenter account password:xxx

vSphere credentials verified

Please visit this URL to authorize this application: https://accounts.google.com/o/xxx

Enter the authorization code: xxx

This Migrate Connector was registered to Source xxx in Project yyy

Please select project:

xxx

List is longer than 10, truncating list. Please select or type project.

xxx

Please select region:

1. asia-east1

2. asia-south1

...

List is longer than 10, truncating list. Please select or type region.

us-east4

Please supply new vSphere source name (vSphere source format must be only lowercase letters, digits, and hyphens and have a length between 6 and 63) : vcsa-599

Creating new source…

Please select service account: ("new" to create)

1. new

2. xxx

new

Please supply new service account name (service account format must be only lowercase letters, digits, and hyphens and have a length between 6 and 30): migration

Waiting for the Migrate Connector to become active. This may take several minutes…

Registration completed

If you want to check the status of the appliance, use m4c status.
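The registration prompts print their naming rules, so candidate names can be checked before you start. A minimal Python sketch that implements only the rules the prompts state (lowercase letters, digits and hyphens; 6 to 63 characters for the vSphere source name, 6 to 30 for the service account name). Google Cloud may enforce further rules the prompts do not mention, so a name that passes here could still be rejected.

```python
import re

# Rules as printed by the m4c register prompts:
#   vSphere source name:  lowercase letters, digits, hyphens; length 6-63
#   service account name: lowercase letters, digits, hyphens; length 6-30
# Any additional Google Cloud rules are NOT checked here.
_ALLOWED = re.compile(r"[a-z0-9-]+")


def is_valid_name(name: str, min_len: int, max_len: int) -> bool:
    return (min_len <= len(name) <= max_len
            and _ALLOWED.fullmatch(name) is not None)


def is_valid_source_name(name: str) -> bool:
    return is_valid_name(name, 6, 63)


def is_valid_service_account_name(name: str) -> bool:
    return is_valid_name(name, 6, 30)
```

Both names used in the transcript pass: is_valid_source_name("vcsa-599") and is_valid_service_account_name("migration") return True.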

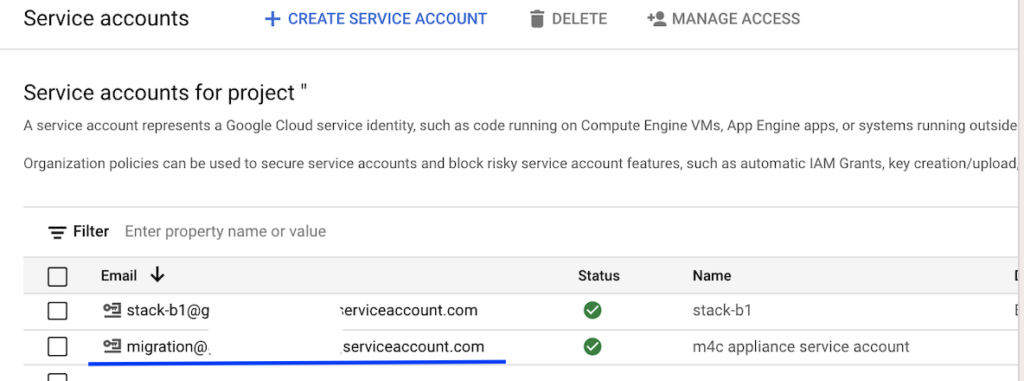

After a successful registration, you will find that new service accounts have been created to support migrations and OS customisations.

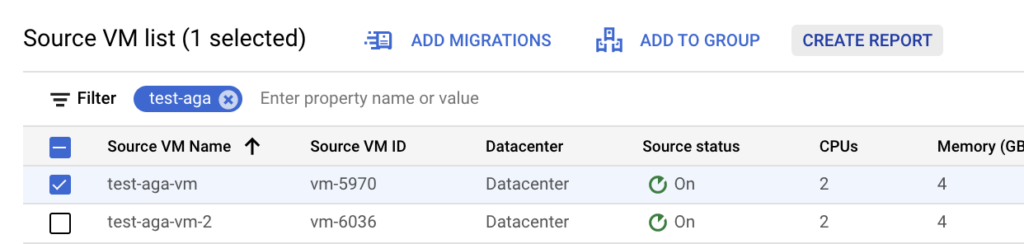

The Source tab will also list all VMs under the registered vCenter.

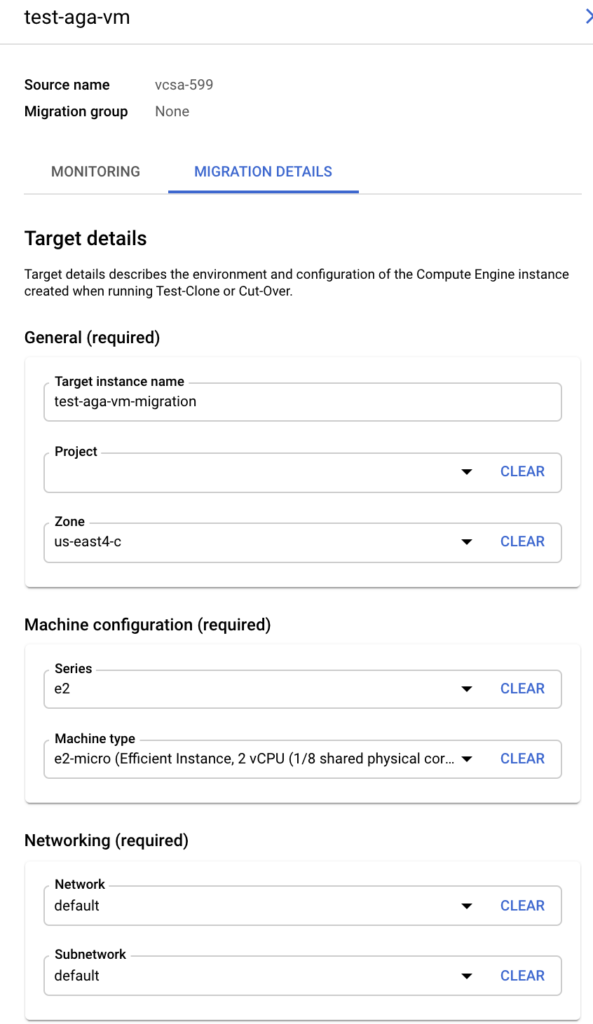

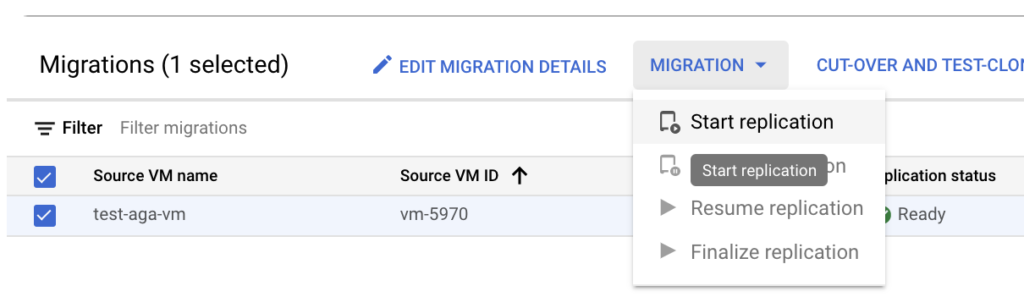

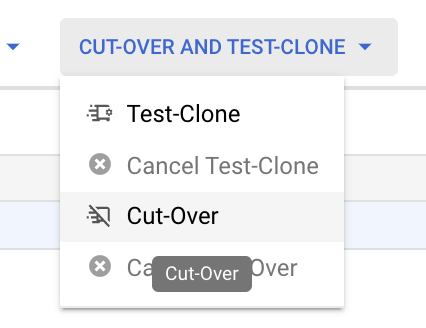

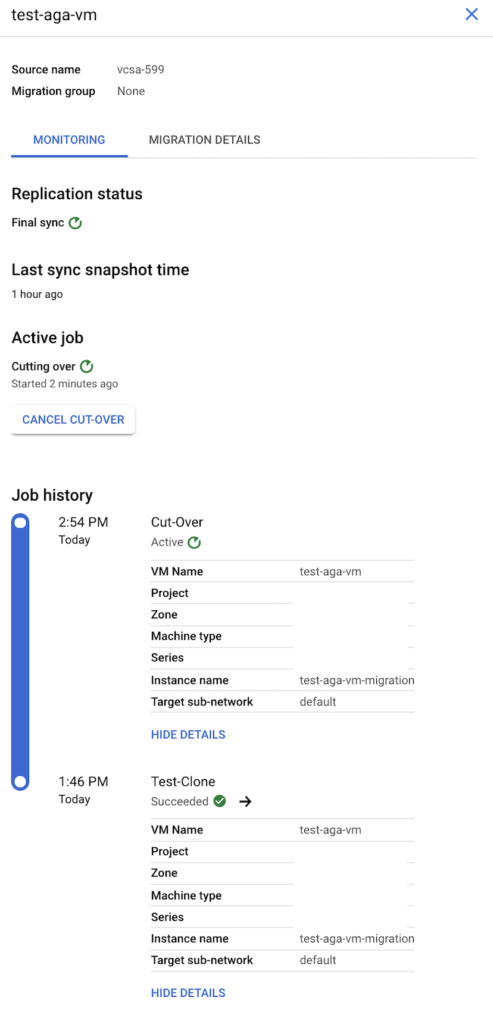

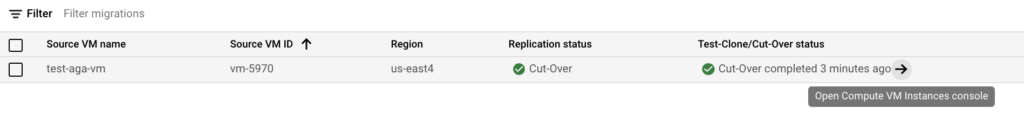

You can now start replicating the VM. After the initial sync, you can either test-clone the VM (to a sandbox VPC, for example) or perform a cut-over. Both actions require you to provide the VM's Migration Details: M4C needs to know where you want the replica to be created, which machine type to use for the test clone or cut-over, and how frequently the replication cycle should run. Because replication keeps running on that schedule, it looks like this service could be used not only for migrations but also for disaster recovery (DR).
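The rule that a test clone or cut-over needs both a completed initial sync and filled-in Migration Details can be modelled in a few lines. This is a hypothetical Python sketch: the field names are illustrative and are not M4C's API. It also shows why the replication frequency matters for DR; the worst-case data-loss (RPO) estimate assumes the interval is measured from the start of one cycle to the start of the next.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class MigrationDetails:
    """Hypothetical stand-in for the Migration Details form (not M4C's API)."""
    target_project: str
    zone: str                          # where the replica is created
    machine_type: str                  # used for test clones and cut-over
    network: str                       # e.g. a sandbox VPC for test clones
    replication_interval_hours: float  # how often a replication cycle runs

    def missing_fields(self) -> List[str]:
        """Names of required text fields that are still empty."""
        required = ("target_project", "zone", "machine_type", "network")
        return [name for name in required if not getattr(self, name)]

    def worst_case_rpo_hours(self, cycle_duration_hours: float) -> float:
        """Changes lost if the source fails just before a cycle finishes:
        one full interval plus the running cycle's duration (assumes the
        interval is measured start-to-start)."""
        return self.replication_interval_hours + cycle_duration_hours


def can_test_clone_or_cut_over(initial_sync_done: bool,
                               details: MigrationDetails) -> bool:
    """Both actions need a finished initial sync and complete details."""
    return initial_sync_done and not details.missing_fields()
```

With a 4-hour interval and cycles that take 30 minutes, worst_case_rpo_hours(0.5) returns 4.5, so up to about four and a half hours of changes could be lost in a DR scenario under these assumptions.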

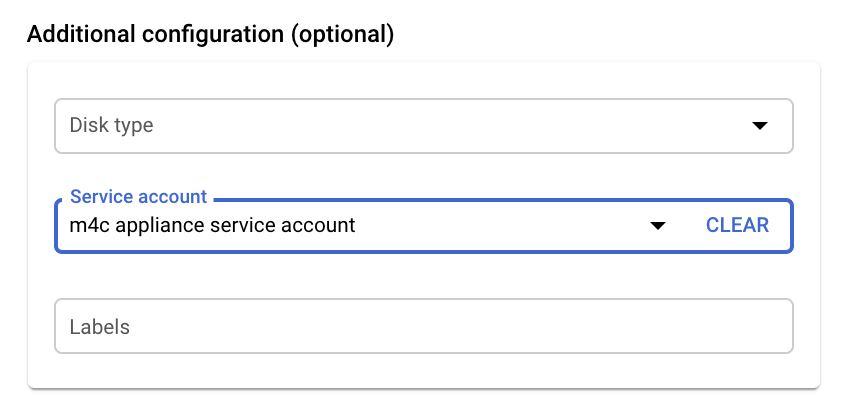

If you want to use a service account, this is where you configure it:

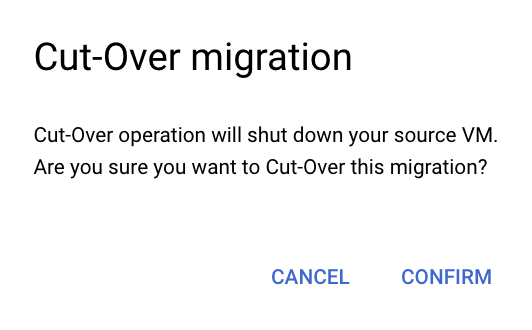

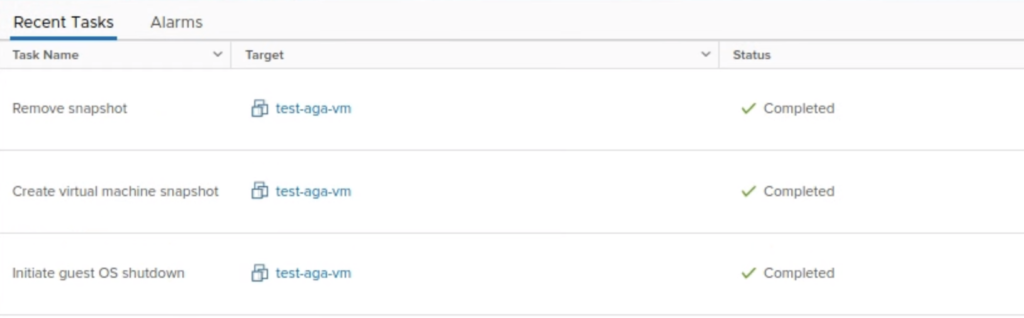

A cut-over never deletes the original VM on the vSphere side; it only powers it off. The other activity I observed during replication and cut-over was VM snapshots. Beyond that, M4C does not reconfigure the VM running on vSphere, so if a cut-over fails, you can always power the original VM back on.
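The cut-over behaviour described above can be captured in a toy model that makes the safety property explicit: the source VM is never deleted, and a failed cut-over leaves a VM you can power back on. This is a hypothetical Python sketch, not M4C code. The step order and the create_target callback are assumptions, and the rollback, which is something you do yourself in practice, is automated here for brevity.

```python
"""Toy model of the vSphere source VM during an M4C cut-over, based only on
the behaviour described in the article. Not M4C code."""
from dataclasses import dataclass
from typing import Callable


@dataclass
class SourceVM:
    name: str
    powered_on: bool = True
    deleted: bool = False  # stays False: cut-over never deletes the source
    snapshots: int = 0     # snapshot cleanup is not modelled


def cut_over_with_rollback(vm: SourceVM,
                           create_target: Callable[[], bool]) -> bool:
    """Return True if the Compute Engine VM came up; otherwise roll back."""
    vm.powered_on = False  # source is shut down, not deleted
    vm.snapshots += 1      # final replication from a snapshot (assumed order)
    if create_target():    # hypothetical callback: True on success
        return True
    vm.powered_on = True   # rollback: power the original VM back on
    return False
```

In both outcomes deleted stays False, which is the property that makes a failed cut-over low-risk.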

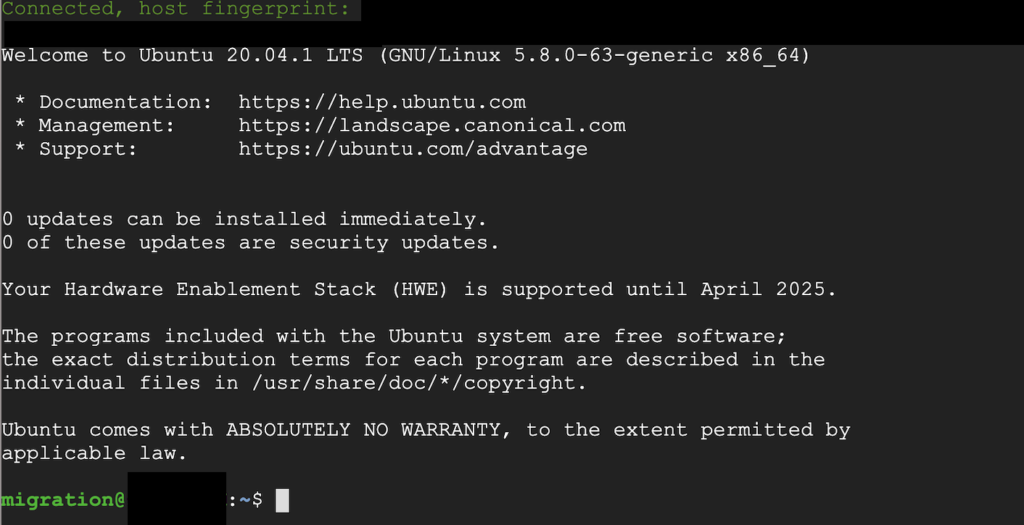

When my cut-over task completed, I could SSH into my migrated VM from Cloud Shell to evaluate it.

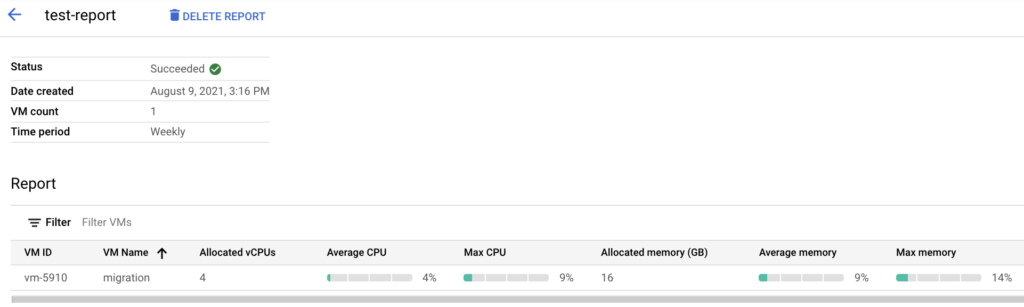

M4C performs many OS adaptations when converting a VM from VMware to Compute Engine, such as uninstalling VMware Tools, configuring the NIC to use DHCP, and installing Google packages. The new VM gets new IP addresses from your VPC. It can also use different CPU and RAM settings from the ones configured on vSphere, so a migration is a good moment to evaluate and resize your VM. M4C also offers a VM utilization report that you can run over a longer period on VMs still running on vSphere to right-size them before migration.
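The utilization report gives you data; turning it into a target size is still a judgement call. A minimal Python sketch, assuming you have CPU and memory utilization samples expressed as percentages of the currently configured vCPUs and RAM. The 95th percentile, the 20% headroom and the round-up rules are hypothetical choices, not M4C's algorithm, and you would still map the result to an actual Compute Engine machine type.

```python
"""Right-sizing sketch from utilization samples. Percentile, headroom and
rounding are hypothetical choices, not M4C's algorithm."""
import math
from typing import Sequence, Tuple


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, with 0 < pct <= 100."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


def recommend_size(current_vcpus: int, current_ram_gb: float,
                   cpu_util_pct: Sequence[float],
                   ram_util_pct: Sequence[float],
                   pct: float = 95.0,
                   headroom: float = 1.2) -> Tuple[int, float]:
    """Return (vCPUs, RAM in GB) sized to the pct-th percentile plus headroom."""
    cpu_needed = current_vcpus * percentile(cpu_util_pct, pct) / 100 * headroom
    ram_needed = current_ram_gb * percentile(ram_util_pct, pct) / 100 * headroom
    vcpus = max(1, math.ceil(cpu_needed))       # at least one vCPU
    ram_gb = max(1.0, math.ceil(ram_needed))    # round up to whole GB
    return vcpus, float(ram_gb)
```

For example, a VM configured with 8 vCPUs and 32 GB whose 95th-percentile CPU and memory utilization are 20% and 50% comes out at (2, 20.0), that is 2 vCPUs and 20 GB of RAM.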