vSAN uses the concept of Fault Domains (FDs) to group hosts into pools. Each FD can contain one or more ESXi hosts. FDs are usually used to protect the cluster against a rack or a site failure. vSAN will never place components of the same object in the same FD. If a whole FD fails (for example, a top-of-rack switch failure or a site disconnection), we will still have a majority of votes, so the object stays available.

If we don’t configure any FDs in vCenter, every ESXi host becomes its own FD, because vSAN will never place components of the same object on the same host, even if the host has more than one disk group. So the smallest FD is the host itself.

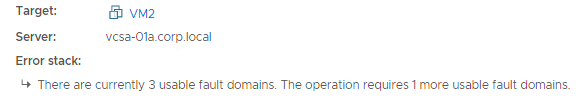

In vSAN the smallest number of Fault Domains is 3, and this configuration protects against a single FD failure. With MIRROR, protecting against n FD failures requires 2n+1 Fault Domains: 5 FDs for two failures and 7 FDs for three failures.
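
The 2n+1 rule is easy to check with a few lines of Python. This is only a sketch of the arithmetic from the paragraph above; the function name `min_fault_domains_mirror` is made up for illustration and is not part of any vSAN tool or API.

```python
def min_fault_domains_mirror(failures_to_tolerate: int) -> int:
    """Return 2n+1, the FD count the text gives for n failures with MIRROR."""
    if failures_to_tolerate < 1:
        raise ValueError("failures_to_tolerate must be at least 1")
    return 2 * failures_to_tolerate + 1


# Print the FD requirement for the three cases mentioned in the text.
for n in (1, 2, 3):
    print(f"FTT={n} mirror needs {min_fault_domains_mirror(n)} fault domains")
```

For n = 1, 2 and 3 this gives 3, 5 and 7 Fault Domains, the same numbers as above.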

It is best practice to place the same number of hosts in every FD.

FDs are configured per vSAN cluster.

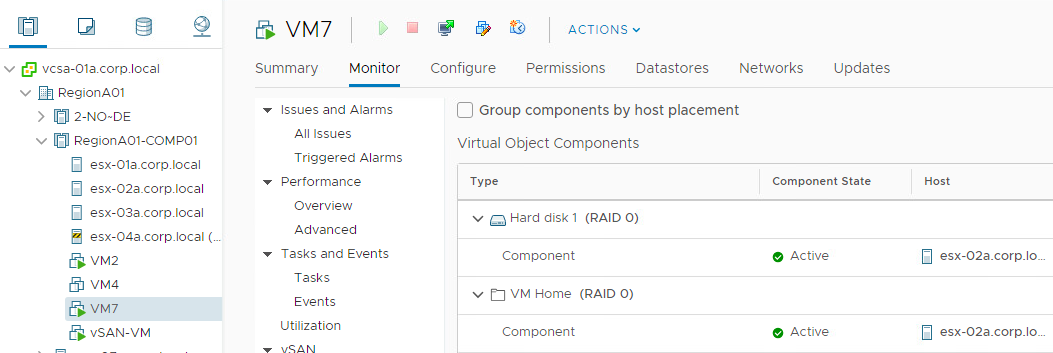

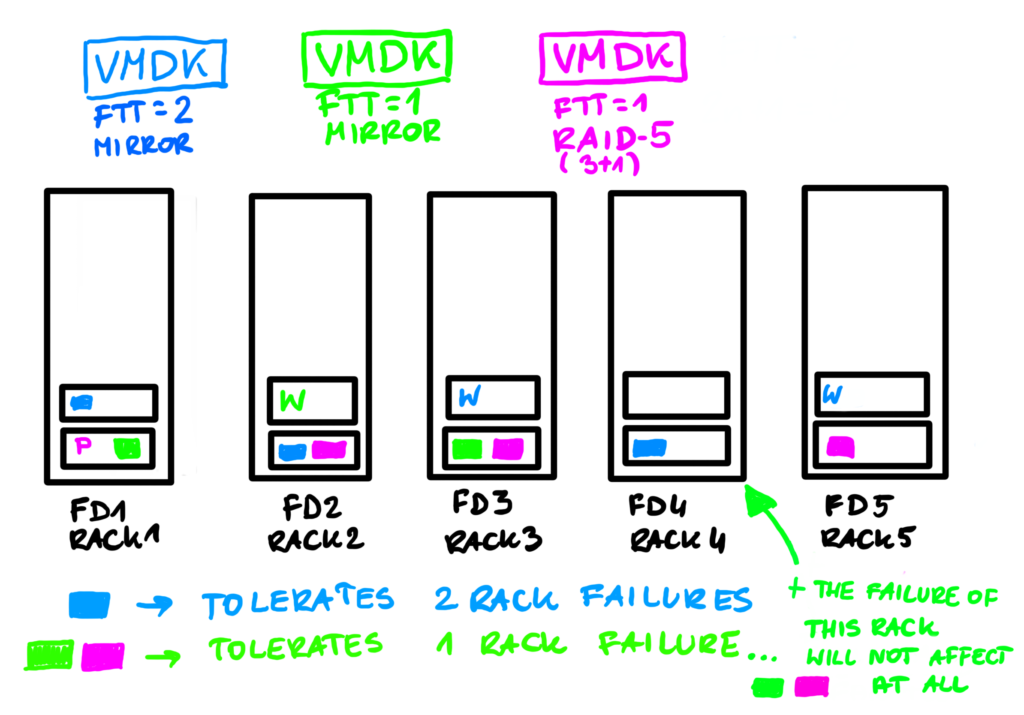

Example 1

Here is a simple example. Imagine we have 5 racks and 10 ESXi hosts. We could place two ESXi per rack and this way we could have 5 Fault Domains.

The blue VMDK uses an SPBM policy with FTT=2 mirror, and its components are placed across 5 FDs (3x VMDK replica + 2x witness). The green VMDK uses FTT=1 mirror, so it requires fewer FDs than are available: it uses only 3 and won’t occupy all the racks. The pink VMDK uses FTT=1 RAID-5 (3x data + 1x parity), and for that only 4 FDs are needed.

vSAN distributes components automatically, so FDs are a way to influence where the components are placed.

In this example, quite by accident, FD4 holds only components of the blue VMDK. The green and pink VMDKs are protected against a single rack failure anyway, but a failure of FD4 will not affect them at all.
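
To play with this scenario, here is a small sketch that models which FDs hold components of each VMDK and reports what a failure does to them. The placement in `PLACEMENT` is an assumption made up to match the description (blue in all 5 FDs, green in 3, pink in 4, nothing but blue in FD4); the real layout is whatever vSAN chose. The rule "an object survives as long as it loses components in no more than FTT fault domains" is also a simplification that assumes one component per FD and ignores vSAN's actual vote accounting.

```python
# Hypothetical placement: which fault domains hold components of each VMDK.
PLACEMENT = {
    "blue": {"ftt": 2, "fds": {"FD1", "FD2", "FD3", "FD4", "FD5"}},
    "green": {"ftt": 1, "fds": {"FD1", "FD2", "FD3"}},
    "pink": {"ftt": 1, "fds": {"FD1", "FD2", "FD3", "FD5"}},
}


def impact(placement: dict, failed_fds: set) -> dict:
    """Classify every object as unaffected, degraded, or unavailable."""
    result = {}
    for name, obj in placement.items():
        # Count the failed FDs that hold a component of this object.
        lost = len(obj["fds"] & failed_fds)
        if lost == 0:
            result[name] = "unaffected"
        elif lost <= obj["ftt"]:
            result[name] = "degraded"
        else:
            result[name] = "unavailable"
    return result


print(impact(PLACEMENT, {"FD4"}))
```

With FD4 failed, only the blue VMDK is reported as degraded, while green and pink are unaffected. Passing `{"FD1", "FD2"}` instead shows the difference between FTT=2 and FTT=1.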

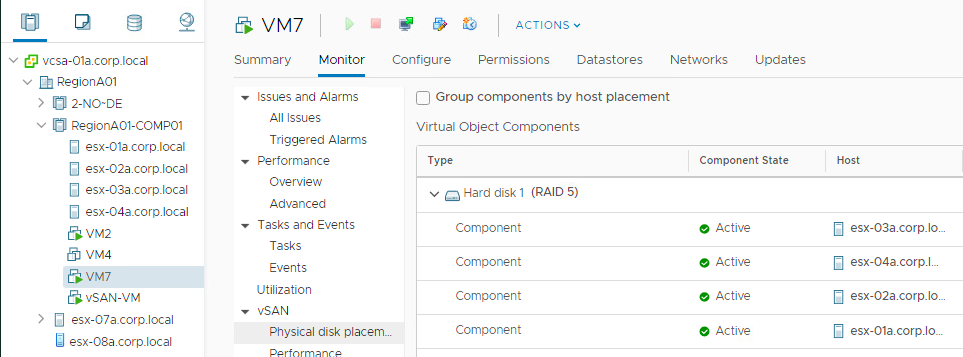

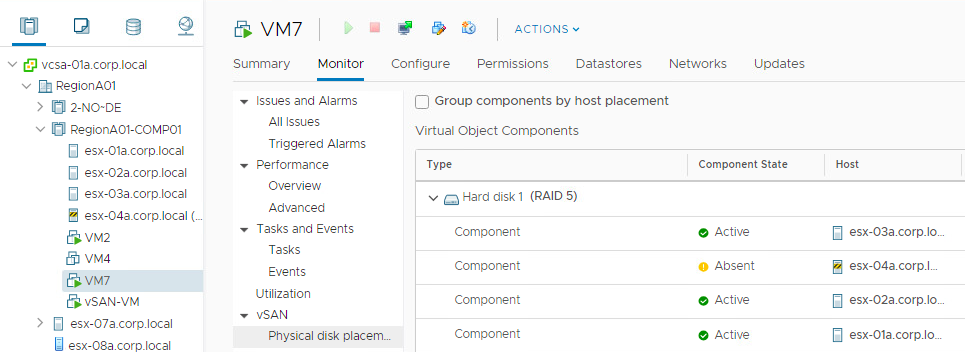

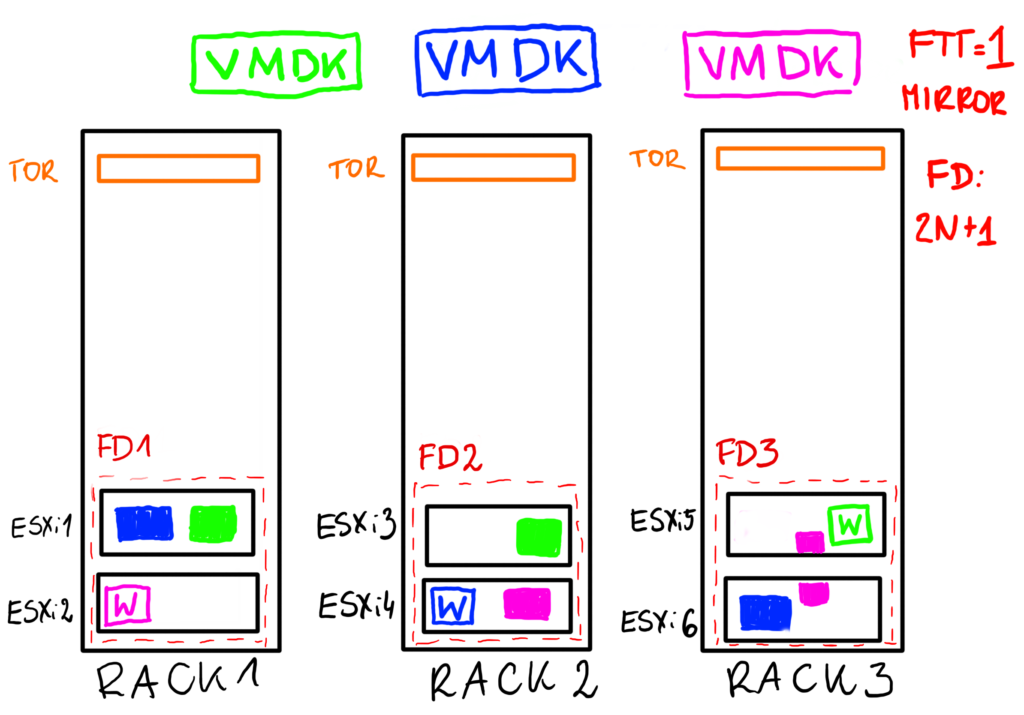

Example 2

Here is another example. The smallest number of FDs is 3, so let’s imagine that this time we have 3 racks and 6 ESXi hosts, two hosts per rack. Three FDs protect against a single rack failure: any one of the racks can fail and we will still have enough components for our objects to function. Remember that we need to fix the faulty rack sooner or later, because with only 3 FDs there is no spare fault domain in which vSAN could rebuild the missing components.

With 3 racks we could use an FTT=1 mirror policy. Components are spread evenly among the FDs. There is no dedicated “witness” rack: the witness component of each object can be placed on any rack, and different objects can have their witnesses on different racks.

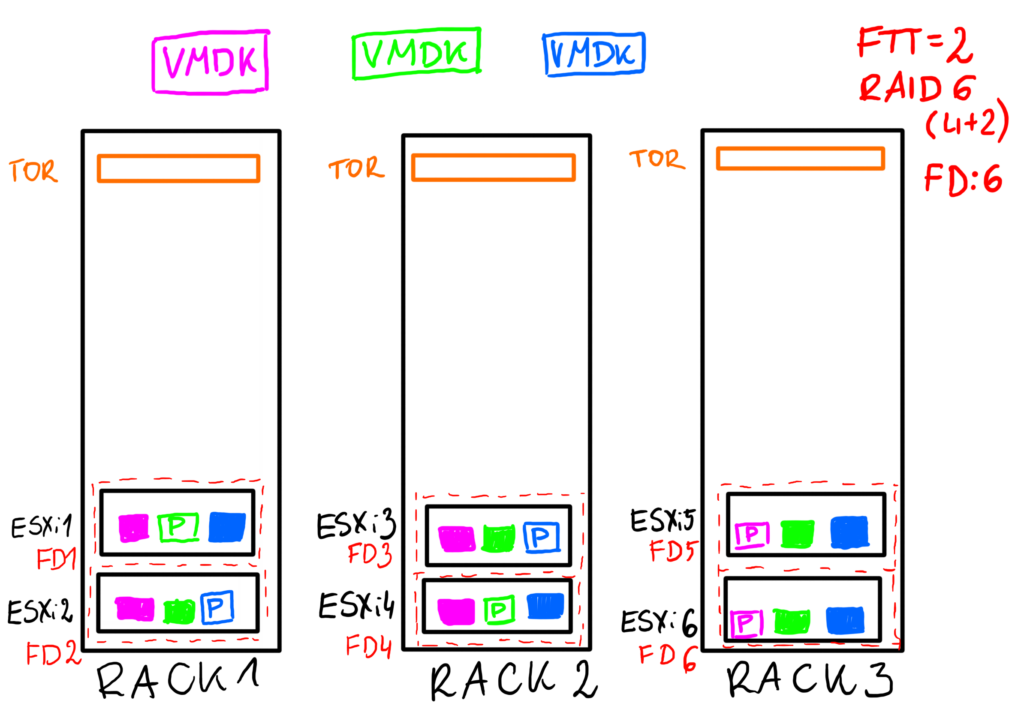

Example 3

With 3 racks we could potentially use an FTT=2 policy with RAID-6 (4x data + 2x parity per data block; please note that there is no dedicated ‘parity’ component, it is described this way just for simplification) and, with a minimum of 6 hosts, still protect the cluster against a rack failure. It is a little bit tricky. Imagine we still have those 6 hosts from Example 2, and this time every host is an FD. The loss of a single rack equals 2 host failures, and this is the maximum RAID-6 can protect us against.
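
The reasoning can be written down as a quick check. The sketch below assumes that every host is its own FD and holds exactly one of the six RAID-6 components, so losing a rack costs as many components as the rack has hosts. Host and rack names are invented for illustration.

```python
# Hypothetical layout: 3 racks, 2 hosts each, every host is its own FD.
RACKS = {
    "rack1": ["esx1", "esx2"],
    "rack2": ["esx3", "esx4"],
    "rack3": ["esx5", "esx6"],
}


def survives_any_rack_failure(racks: dict, ftt: int) -> bool:
    """True if no single rack holds more hosts (components) than FTT."""
    return all(len(hosts) <= ftt for hosts in racks.values())


print(survives_any_rack_failure(RACKS, ftt=2))  # RAID-6, FTT=2
print(survives_any_rack_failure(RACKS, ftt=1))  # FTT=1 would not be enough
```

The first call returns `True` and the second `False`. The check also shows why the hosts should be spread evenly: a rack with three hosts would exceed what FTT=2 can absorb.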

Example 4

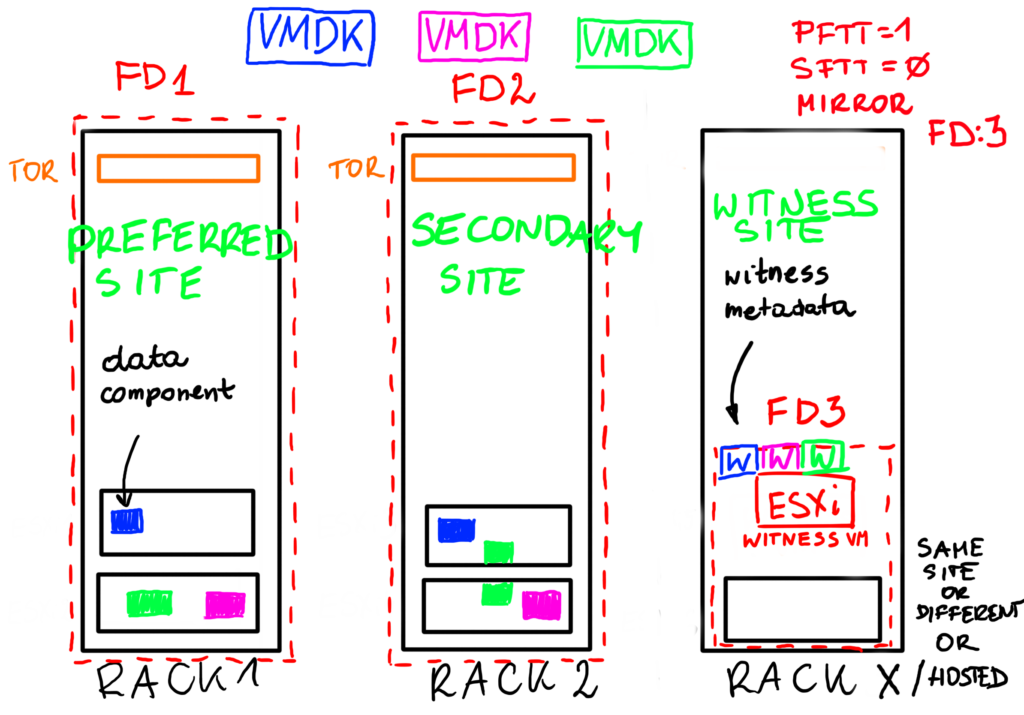

It would be great if we could build an environment that consists of only 2 racks and survives the failure of a single rack. With 2 racks alone this is not possible: such an approach requires some kind of arbiter to protect against a split-brain scenario.

But there IS a way to do this using 2 racks, assuming a witness host is our arbiter and it is hosted somewhere outside, or on a standalone tower host that does not require a third rack ;-).

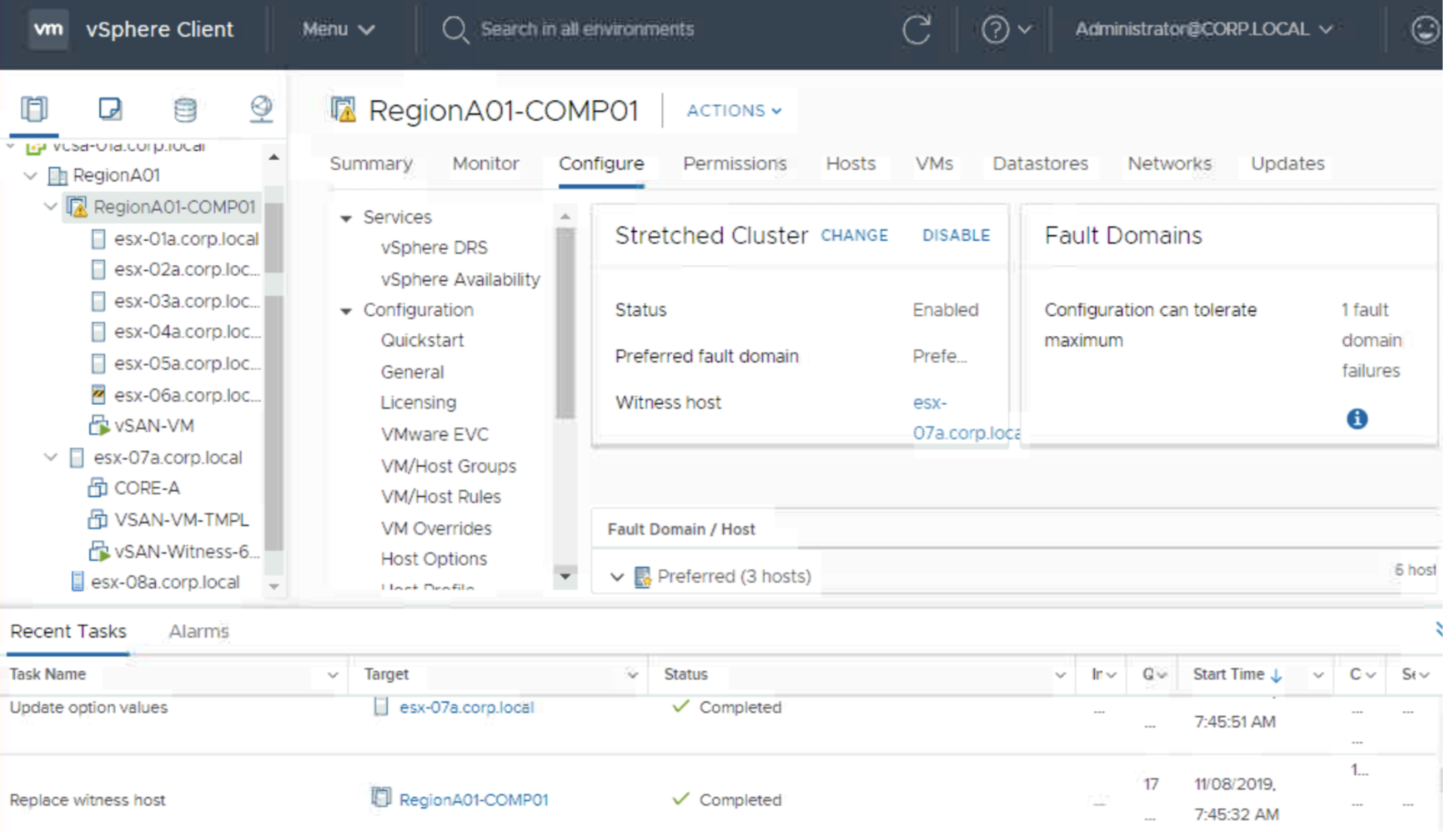

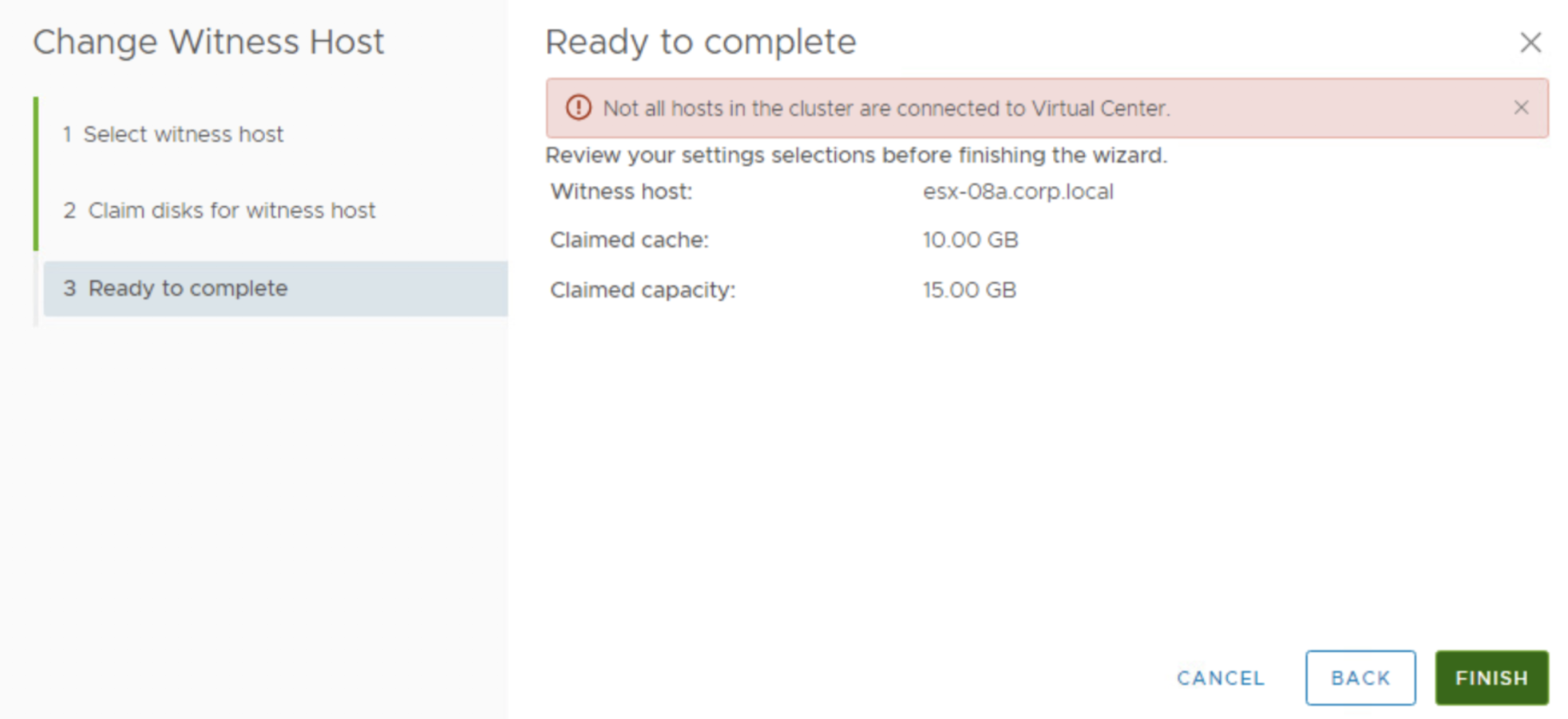

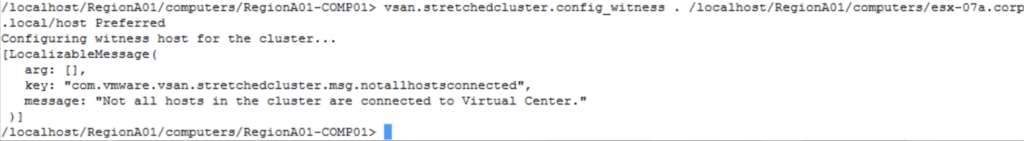

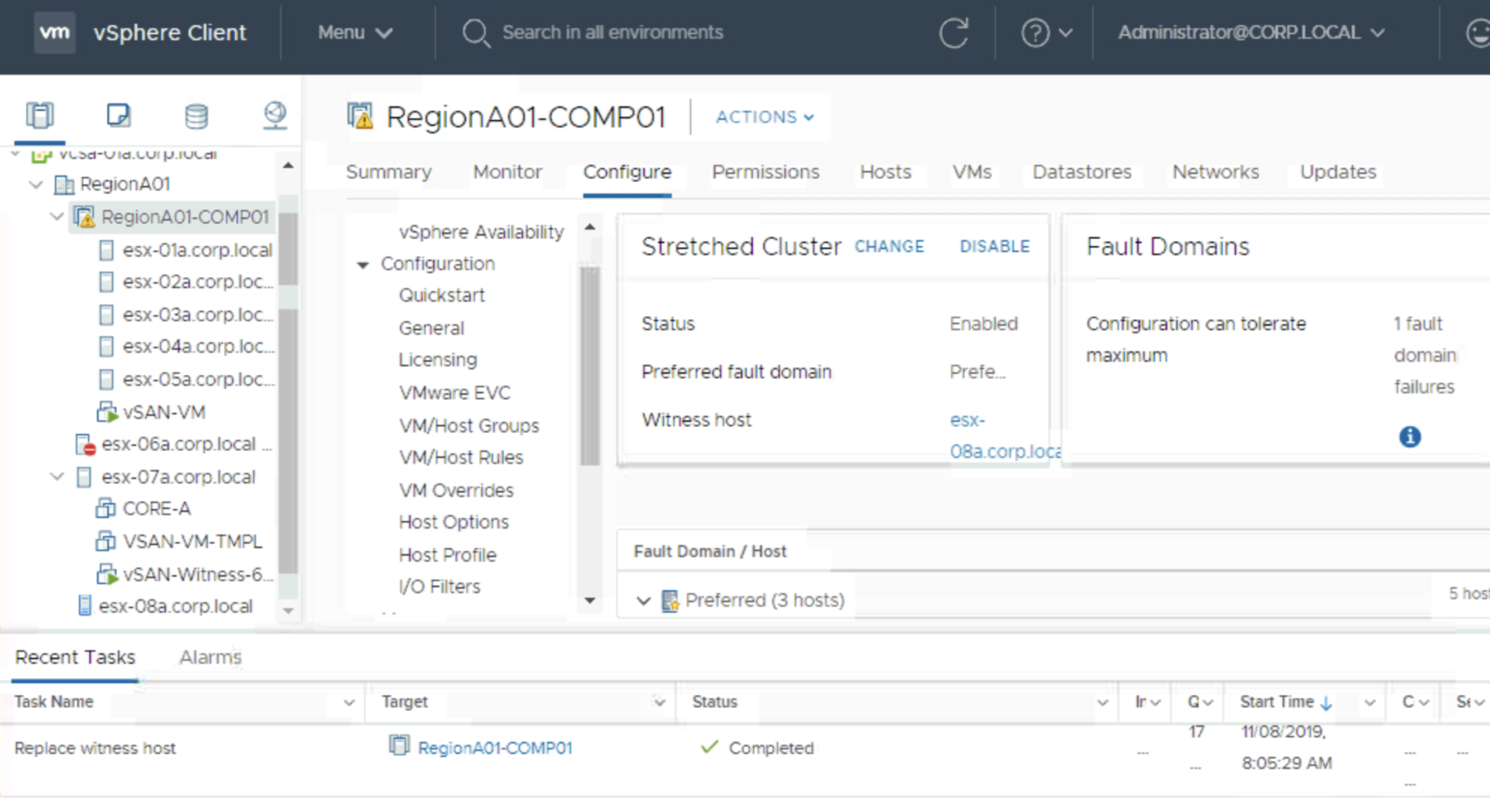

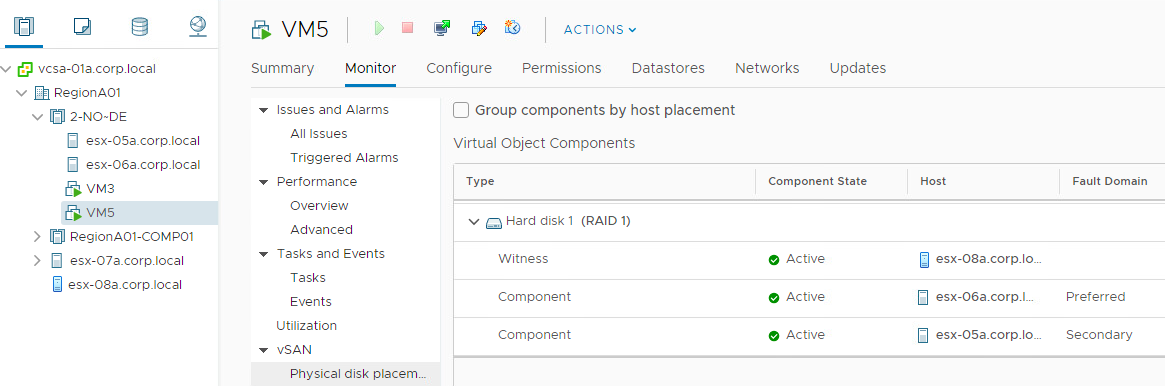

Yes, we can use the Stretched Cluster concept to introduce protection against a single rack failure in the following way:

- FD1 – Preferred Site (Rack 1)

- FD2 – Secondary Site (Rack 2)

- FD3 – Witness Site (outside, hosted or Rack 3)

In this case vSAN will treat Rack 1 as, for example, the Preferred Site and Rack 2 as the Secondary Site, and will use Primary Failures To Tolerate of 1 to mirror components between those “sites”. The racks may stand next to each other or be placed in different buildings (an RTT of no more than 5 ms is required). If we have enough hosts, we could even use Secondary Failures To Tolerate to further protect our objects inside a rack.

In a Stretched Cluster we need a Witness host to protect the cluster against split brain. The Witness can be hosted in another rack or in another data center: anywhere except Rack 1 and Rack 2.
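
Why the Witness matters can be shown with a simple majority check. The sketch below assumes one vote per fault domain, which is a simplification (vSAN assigns votes per component), and the names `has_quorum`, `rack1`, `rack2` and `witness` are only illustrative.

```python
def has_quorum(votes: dict, failed: set) -> bool:
    """True if the surviving fault domains hold more than half of all votes."""
    total = sum(votes.values())
    alive = sum(v for fd, v in votes.items() if fd not in failed)
    return alive * 2 > total


two_racks = {"rack1": 1, "rack2": 1}
with_witness = {"rack1": 1, "rack2": 1, "witness": 1}

# Two racks only: losing one leaves 1 of 2 votes, which is not a majority.
print(has_quorum(two_racks, {"rack1"}))

# Two racks plus a witness: any single failure leaves 2 of 3 votes.
for fd in with_witness:
    print(fd, has_quorum(with_witness, {fd}))
```

Without the Witness the first check fails, because neither rack can tell a dead neighbour from a network partition. With the Witness, any single FD can be lost and the remaining two still form a majority.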